By Dr. Randall Hill, Vice Dean, Viterbi School of Engineering, Omar B. Milligan Professor in Computer Science (Games and Interactive Media), Executive Director, ICT

Dr. Randall W. Hill is the Executive Director of the USC Institute for Creative Technologies, Vice Dean of the Viterbi School of Engineering, and was recently named the Omar B. Milligan Professor in Computer Science (Games and Interactive Media). After graduating from the United States Military Academy at West Point, Randy served as a commissioned officer in the U.S. Army, with assignments in field artillery and military intelligence, before earning his master's and PhD in Computer Science from USC. He worked at NASA JPL in the Deep Space Network Advanced Technology Program before joining the USC Information Sciences Institute to pursue models of human behavior and decision-making for real-time simulation environments. This research brought him to ICT as a Senior Scientist in 2000, and he was promoted to Executive Director in 2006. Dr. Hill is a member of the American Association for Artificial Intelligence and has authored over 80 technical publications.

ORIGIN STORY

In 1996, an extraordinary workshop took place about forty miles south of Los Angeles, in Irvine. It was called Modeling and Simulation: Linking Entertainment and Defense and had been set up by the National Research Council. About sixty people had been invited. Half the room was from the entertainment industry. Everyone else was from across Academia and the branches of the Department of Defense.

Why had the National Research Council brought everyone together? Well, the Department of Defense had been watching the emergence of immersive and interactive entertainment for some time and wondering how they could take advantage of those skills to create solutions for the military. Back in Irvine, the presentations sparked something. The conversation became animated as they found common ground, and connection. Much of that connection came from science fiction-turning-fact. It certainly didn’t hurt that several people in the room came from Paramount Studios, and worked on Star Trek – as many in the military are die-hard Trekkies.

After the event, the NRC produced a set of academic papers, and then published them as a book. It could have been the end of that, but it wasn’t. People with influence on both sides wanted to make sure something came out of it. The DoD’s Director of Defense Research and Engineering, Anita Jones, and the Army’s Chief Scientist, Mike Andrews, decided to form a University Affiliated Research Center, known as a UARC, to implement a collaboration. The University of Southern California won the competition for the sixth UARC, and they named it the Institute for Creative Technologies.

When USC took on the UARC, it was decided to locate it off the academic campus. Instead, the original founders leased a building in Marina del Rey and the production designer, Herman F. Zimmerman, famous for his work on Star Trek, got to work, creating highly imaginative interiors for the fledgling institute. It looked like the interior of a starship, complete with bulkheads and an automatic door in the front of the building that opened with a “whoosh.” The legend goes that Zimmerman used his crew and a Paramount soundstage to mock up and construct everything using Hollywood set design techniques, and that they installed the interiors essentially over a weekend.

The first staffers came from Disney, Paramount, across USC, and other academic institutions. It was both a visionary and highly experimental group of talented individuals.

Finally, in August 1999, ICT opened its doors.

Today, 25 years later, ICT is a fully-fledged lab with academics working alongside creatives and makers. We build concepts, demos and prototypes which are cost-effective, agile and adaptive. Through our relationships with industry and branches of the Department of Defense, we get access to next-generation government data sets to advance human-computer interaction.

Because we are based in L.A. we draw on regional strengths, with our proximity to not only Hollywood, but aerospace, strategic military bases including the National Training Center, Camp Pendleton and the Navy’s China Lake, as well as the technology industry in Silicon Beach.

THE MANDATE

Let’s back up: what was the original mandate for ICT? Officially, it was to use entertainment and game industry skills to make military training better. To create immersive training systems which improve decision-making, build better leaders, and support the acquisition of other foundational skills. ICT was tasked with exploring emerging technologies, such as virtual reality, with a vision to create synthetic and adaptive experiences that were so compelling that participants responded as if they were real.

It sounds like science fiction, and, back then, it was. Let’s not forget, this was before smartphones, or commercially-available head mounted displays, and cloud-computing was in its nascent stage. We were living through an AI winter. Quantum computing was but a fever-dream.

Everyone had big ideas and there were several grand plans. But our Army sponsors knew exactly what they wanted. They said our mission, if we chose to accept it, was this: “Go build the holodeck!”

BUILDING THE HOLODECK

Over almost 25 years, through both basic and applied research, we have done exactly that. We have compiled almost all of the components of a holodeck, with the ability to render virtual environments in animation and sound. As in the fictional holodeck, we have created photo-real computer graphics and virtual environments with intelligent agents.

Virtual humans are used throughout our simulations, acting as coaches to practice and refine crucial skills. These platforms are rich in data and complexity, with natural language generation, speech synthesis, emotion modeling and multi-modal sensing, drawing on learning sciences to reflect how people attain and retain knowledge.

Importantly, all our work stems from a deep understanding of story, the craft of narrative, interaction and a compelling arc. ICT’s simulations are effective tools because they draw the user in, and keep them engaged. As human beings we are hard-wired to remember stories, and this is a core competency of our work at ICT.

Stories are central to the holodeck concept. Relying on narrative, the holodeck was a way for the Star Trek crew to step outside of their day-to-day duties and either kick back and relax, or detour into fantasy worlds in order to cope with the existential realities of hurtling through space at 6000 times the speed of light.

When the Army tasked ICT with creating holodeck-style experiences, it was for tactical training purposes, rather than downtime rec-room applications. But virtual training requires narrative because, without story, the imagination is not ignited. All of our work, therefore, centers on story – and has done since day one.

MISSION REHEARSAL EXERCISE

Our first large-scale mixed reality, holodeck-style experimentation, was in 2000-2001. It was called the Mission Rehearsal Exercise, or MRE for short. Several academic papers came out of this work, and contributed to its success, including Toward the Holodeck: Integrating Graphics, Sound, Character and Story. It was a massive undertaking, in terms of both R&D, and a blended physical/digital build. Sixteen academics at the top of their game in computer graphics, sound design, speech recognition, narrative structure, learning sciences, and mixed reality, contributed to the paper, and almost fifty staffers worked on the final output, which took up an entire room in ICT’s original building in Marina del Rey.

MRE was also a significant cross-departmental undertaking. ICT was less than two years old when MRE was launched. So, to make it happen, we collaborated with the USC Integrated Media Systems Center, as well as the USC Information Sciences Institute, known as ISI. You may know of ISI, as it was founded in 1972 and its researchers worked with the federal government to create the underlying technologies that power the Internet.

What were we trying to build with MRE? Well, here’s a direct quote from that paper’s abstract: “We describe an initial prototype of a holodeck-like environment that we have created for the Mission Rehearsal Exercise Project. The goal of the project is to create an experience learning system where the participants are immersed in an environment where they can encounter the sights, sounds, and circumstances of real-world scenarios. Virtual humans act as characters and coaches in an interactive story with pedagogical goals.”

Here’s what the MRE experience was like: You’d walk into a large dark room and wait. Then we’d power up a movie theater size screen, which was 8 feet tall and 31 feet wide, and curved, to give a sense of immersion, wrapping around two walls, essentially life-size. As you look around, you realize you’re inside a Bosnian village, during the height of the Balkans conflict. Just as you’re peering at the 3-D computer simulations, enhanced by texture mapping, examining its fidelity, autonomous agents, embodied AIs in the form of U.S. Army soldiers, rush onto the screen and start communicating with you. The immersion is enhanced by 12 speakers providing directional sound and multiple 64-track effects. There’s suddenly a lot going on.

Any hesitation you might have would be dispelled within seconds, especially if you were a soldier, as this scenario is something you’d trained for. You’re in hostile territory, and there’s a problem. An American Humvee has accidentally struck and injured a local child, who is lying on the ground in front of you, being tended to by a medic. An angry crowd is forming across the street. A TV crew is on the way. Meanwhile a weapons inspection team is being threatened by villagers, angry at the intrusion of foreign forces. Your virtual sergeant stands before you, waiting for your orders. What are you going to do first to stop any of these issues escalating? That’s what the characters on screen want to know. How are you going to help? What is your decision, lieutenant?

Technically, some of the agents are basic programs, working on pre-scripted, routine behaviors, but others, playing key roles, are entirely responsive – to you. Plus, in Army lingo, you’re also going to face an AAR afterwards – an After-Action Review. Yes, you’re being watched, and there will be feedback. This took experiential learning to a whole new level. We showed the Mission Rehearsal Exercise to hundreds of Army personnel who found it to be a compelling new way to train leaders and soldiers. This award-winning system put ICT on the map and we knew we were going in the right direction. We had the beginnings of our research and development into simulated environments using advanced technologies.

However, to put it in context, that was 23 years ago. Imagine how far we have come since then.

MRE proved the power of story. What drives our work here at ICT is going beyond the passive experience of entertainment, and into something entirely uncharted. We are focused on exploring how to use narrative within immersive environments, utilizing natural language understanding, and dynamic A.I. agents.

Which brings us back to the holodeck concept again.

VISION AND GRAPHICS LAB

The success of the Mission Rehearsal Exercise highlighted the need to investigate, and in many cases, invent the component technologies that would advance holodeck-type capabilities, including development of virtual humans.

Our Vision and Graphics lab is dedicated to advancing human digitalization. Virtual characters — digitally generated humans that can speak, move and interact — are essential for entertainment, training and education systems. The central goal of ICT’s Vision & Graphics Lab is to make these characters look realistic, including lighting them convincingly. To achieve this level of fidelity, VGL uses our Academy Award-winning Light Stage, which was developed in-house at ICT, to capture and process production-quality assets. VGL collaborates with film industry companies, such as Sony Pictures Imageworks and WETA Digital.

The Vision and Graphics Lab even worked with the Smithsonian Institution in 2014 to create a 3D-printed bust of President Obama. This was accomplished by building a portable version of the Light Stage that was shipped and re-assembled in the White House, where the president sat for a scan. The Presidential Light Stage is on display in our ICT lobby on the first floor. The Obama bust now sits in one of the Smithsonian’s museums.

With a focus on avatar creation, VGL is constantly striving to improve the quality and fidelity of our work. To achieve this, our researchers are always exploring new technologies and updating our processing pipeline, including incorporating AI into its workflow. The lab has collected an extensive database of faces, which provides the foundation for ICT’s morphable face model now being used by a number of organizations. Collectively, these AI-powered tools enable rapid data capturing and processing, personalized avatar creation, and physically-based avatar rendering.

VGL’s mission is to make movie-quality avatars accessible to all communities through a digital human platform that enables low-cost creation, editing, and rendering. In addition to its work in avatar creation, VGL is also developing new representations and approaches for dynamic human capture. This will allow real-time performance capture for VR/AR applications, opening up a whole new world of possibilities for digital human interaction.

The VGL Light Stage has been recognized with two Scientific and Technical Awards from the Academy of Motion Picture Arts and Sciences, and its technology has been used in 49 movies. The lab partners with industry leaders such as Nvidia, Meta, and Digital Domain to advance the development of avatar technologies. It has also published over 160 top-tier academic papers, which have had a significant, pioneering impact in this field.

Dr. Yajie Zhao and her team are now expanding their research scope to include scene/terrain understanding, reconstruction, and interaction. VGL’s ultimate goal is that digital humans should be able to play and interact with the world we live in.

HOLODECK MODULES

Let’s go further into the components of a holodeck and map our current research onto those elements: computing methodologies such as AI, computer graphics, interfaces, virtual, augmented and mixed reality, plus human-centered computing and HCI – human-computer interaction.

HUMAN-INSPIRED ADAPTIVE TEAMING SYSTEMS

At ICT we have 15 Labs, which take a deep dive into all of these areas. Our most recent Lab is called Human-inspired Adaptive Teaming Systems. This is headed up by research scientist Dr. Volkan Ustun.

Building directly on the idea of the autonomous agents described above within the Mission Rehearsal Exercise, Dr. Ustun, and his research team, are focused on enhancing the quality and complexity of non-player characters in training simulations.

Augmenting Multi-agent Reinforcement Learning models, and drawing inspiration from operations research, human judgment and decision-making, game theory, and cognitive architectures, this Lab has successfully created proof-of-concept behavior models that behave optimally and intelligently in a variety of tactical scenarios. Importantly, these dynamic agents are doctrine-based, and understand military hierarchies and policies.

Essentially, Dr. Ustun’s lab has built AIs with a mind of their own, which remain within appropriate boundaries, as per their perceived rank and duties, and can perform tasks within virtual environments.

GEOSPATIAL TERRAIN

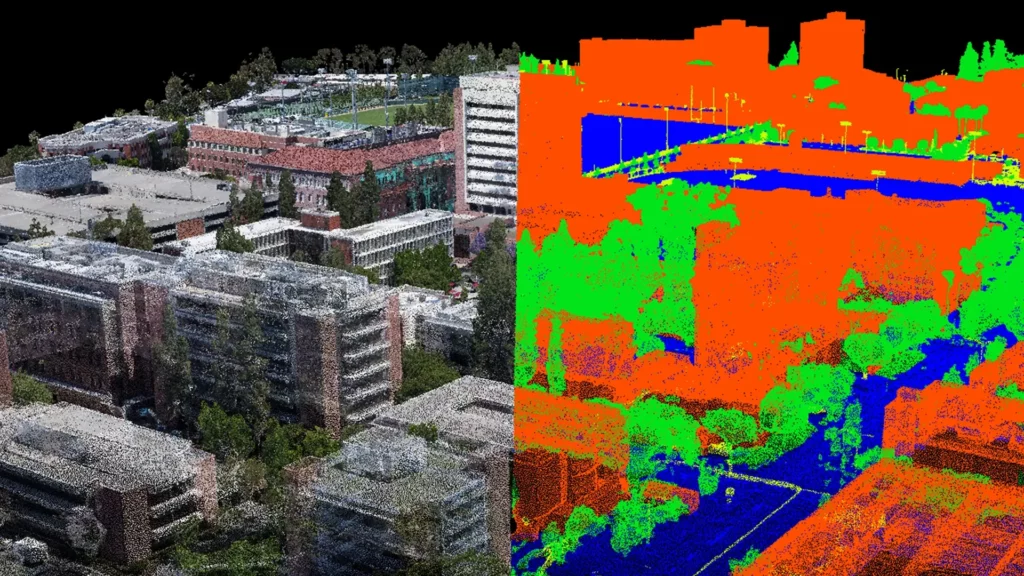

Speaking of environments, this is where our Geospatial Terrain team comes in, founded by Ryan McAlinden and now headed up by Dr. Andrew Feng. We have made significant progress in the last ten years in this area. Dr. Andrew Feng’s team focuses on constructing high-resolution 3D geospatial databases for use in next-generation simulations and virtual environments.

Utilizing both commercial photogrammetric solutions and our in-house R&D processing pipeline, 3D terrain is procedurally re-created from images captured by drone platforms with electro-optical sensors. The ultimate goal of the Terrain team is to automate the process of high-resolution terrain reconstruction and convert it into a simulation capability for military users.

To achieve this aim, they have established an end-to-end pipeline that efficiently goes from data capture, to 3D processing, to semantic segmentation, producing simulation-ready high-resolution terrain. What formerly took months of tedious manual processing can now be achieved automatically in hours.

To date, ICT has produced 3D terrains from UAV collections at over a hundred different sites to support terrain research efforts for the DoD. Our technology is also an integral part of the Army’s One World Server (OWS) system, which utilizes our semantic terrain processing algorithms for creating and processing simulation-ready high-resolution 3D terrain from low-altitude UAV collections. Our Geospatial Lab has also developed terrain simulation applications including line-of-sight analysis, path finding, real-time terrain effects, and concealment analysis.
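To give a flavor of what a line-of-sight query over reconstructed terrain involves, here is a minimal sketch in Python. It is an illustration only, not ICT's implementation: it assumes the terrain has been reduced to a simple grid of elevations, and the function name, the parameters, and the 1.8-meter eye height are all hypothetical. The idea is to walk the cells between observer and target and check whether the ground ever rises above the straight sight line.

```python
def line_of_sight(heights, observer, target, eye_height=1.8, target_height=0.0):
    """Return True if the target cell is visible from the observer cell.

    heights is a 2D list of terrain elevations (hypothetical heightmap);
    observer and target are (row, col) grid cells.
    """
    (r0, c0), (r1, c1) = observer, target
    z0 = heights[r0][c0] + eye_height      # observer's eye elevation
    z1 = heights[r1][c1] + target_height   # elevation of the point being viewed
    steps = max(abs(r1 - r0), abs(c1 - c0))
    if steps == 0:
        return True  # observer and target share a cell
    # Sample the cells strictly between the two endpoints.
    for i in range(1, steps):
        t = i / steps
        r = round(r0 + t * (r1 - r0))
        c = round(c0 + t * (c1 - c0))
        ray_z = z0 + t * (z1 - z0)  # height of the sight line at this sample
        if heights[r][c] > ray_z:
            return False  # terrain blocks the view
    return True


# Usage: a flat 5x5 patch, then the same patch with a 10 m ridge in one row.
flat = [[0.0] * 5 for _ in range(5)]
ridge = [row[:] for row in flat]
ridge[0][2] = 10.0

print(line_of_sight(flat, (0, 0), (0, 4)))
print(line_of_sight(ridge, (0, 0), (0, 4)))
```

A production system would work on full 3D meshes with semantic labels (vegetation, buildings) rather than a bare grid, but the underlying question, whether anything sits between the eye and the target, is the same.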

The Geospatial Lab is now looking to address the current limitations of the photogrammetry process for creating 3D terrains. Specifically, it aims to develop the capability for photorealistic reconstruction and visualization of geospatial terrain using neural rendering techniques.

DIVIS

In Star Trek, the holodeck was also used for scientific training or role-play exercises. Here at ICT, we do the same.

In 2019, in close cooperation with the United States Army Sexual Harassment/Assault Response and Prevention (SHARP) Academy at Fort Leavenworth, ICT created the Digital Interactive Victim Intake Simulation (DIVIS), a standardized, simulated and interactive victim-intake interview practice experience. This interdisciplinary project leverages research from ICT’s Natural Language, Perception, Learning Sciences and Mixed-Reality teams, building on our earlier work with DS2A.

This system enables Sexual Assault Response Coordinator (SARC) and Victim Advocate (VA) students to practice with a digital-based victim in realistic and highly emotional scenarios, similar to role-playing. This training application leverages existing research technology to help cultivate interpersonal communication skills, like rapport building and active listening, and sets out to improve upon classroom instruction by providing an engaging and interactive experience with a digital victim.

SARC and VA trainees are able to use natural language to conduct an intake interview with a “Digital Victim”; the session is recorded, and a semi-autonomous After-Action Review provides logged playback of the student’s verbal and non-verbal actions for facilitator-led assessment.

The beta system, consisting of two Digital Victims, is currently being used at Fort Leavenworth as part of their 6-week SARC training program. ICT has procured a sustainment contract to continue to maintain and improve upon the existing system.

Next steps with DIVIS include the addition of two new Digital Victims to expand reach in terms of both diversity and narrative style. ICT is currently working with the Army SHARP Academy to support a path toward transitioning DIVIS as a training device for additional installations.

BRAVEMIND

There are many traumas which can result from active service in the military. Again, using a holodeck lens, we’ve explored many ways to use immersive technologies to help heal those who suffer.

Some of our projects looked at full-scale room-sized installations – as with the Mission Rehearsal Exercise – but, as the technologies to power head-mounted devices improved and became more widely disseminated, we started working on VR projects too.

Also, as an aside, having one holodeck per spaceship on Star Trek was a pretty expensive undertaking. The military today requires us to be more cost-effective with our technologies.

At ICT, we have pushed the holodeck concept from a single fixed environment into a digital experience through VR, in order to disseminate this technology widely.

BRAVEMIND is ICT’s virtual reality (VR) exposure therapy system which has been shown to produce a meaningful reduction in PTSD symptoms in multiple clinical trials across VA, DoD, and University-based clinical test sites. Exposure therapy, in which a patient – guided by a trained therapist – confronts and reprocesses their trauma memories through a retelling of the experience, has been endorsed as an “evidence-based” treatment for PTSD.

ICT researchers pioneered the use of virtual reality (VR) to extend this treatment, enabling patients to experience a scenario within a virtual world, under very safe and controlled conditions. Most recently, in the largest randomized clinical trial to date, BRAVEMIND was found to be equivalent to the best evidence-based PTSD treatment approach (Prolonged Exposure), and produced better outcomes in patients with co-morbid major depression. VR exposure therapy was also found to be preferred over traditional therapy by 76% of incoming patients in this trial.

In addition to the visual stimuli presented in the VR head-mounted display, directional 3D audio, vibrations and smells are delivered into the simulation to provide enhanced fidelity of recall. Specially-trained clinicians control the stimulus presentation via a separate “Wizard of Oz” interface, and are in full audio contact with the patient at all times.

BRAVEMIND is a platform that can be adapted to deliver a variety of VR-based treatment scenarios and can be used to treat PTSD due to other non-combat trauma experiences. The original application consisted of a series of graphical renderings of Afghan and Iraqi city, village, mountain, and desert road environments, as well as scenarios relevant to combat medics.

The most recent iteration of BRAVEMIND was developed for survivors displaced by the war in Ukraine as a virtual metaverse space to provide a place to gather, share stories, and receive social support.

BRAVEMIND has been distributed to over 170 clinical sites. Through a partnership with the SoldierStrong Foundation, ICT now distributes the equipment and clinical training needed to establish a treatment site at VA medical centers and other sites that serve the needs of our Veterans, including Puget Sound VA and Emory University.

BATTLE BUDDY

We are now developing new prototypes which can run on a mobile device, as not everyone has access to a head-mounted device. One of these projects is called Battle Buddy. The U.S. Department of Veterans Affairs’ Mission Daybreak Challenge is part of a 10-year strategy to end Veteran suicide through a comprehensive, public health approach. In response to a call for proposals in 2022, ICT’s MedVR and Integrated Virtual Humans teams proposed Battle Buddy for Suicide Prevention, an AI-driven mobile health (mHealth) application tailored exclusively for Veterans.

The virtual human (VH) component of Battle Buddy is a computer-based dialogue system with virtual embodiment, utilizing various multi-modal language cues such as text, speech, animated facial expressions, and gestures to interact with users. Inspired by the U.S. military practice of assigning fellow soldiers as partners to provide mutual assistance in both combat and non-combat situations, the name “Battle Buddy” symbolizes this app’s mission.

Battle Buddy’s comprehensive approach aims to establish a suicide prevention ecosystem that can be customized to meet the unique needs of individual users. Battle Buddy not only provides valuable in-app health and wellness content, it also acts as a springboard to real-world support networks, including friends, family, and various resources provided by the Department of Veterans Affairs (VA) and the Veterans Crisis Line (VCL). Veterans will also be able to opt-in to connect their wearable devices (sleep sensors, exercise trackers, etc.) to gain a better understanding, and develop agency, towards their body/mind holistic health.

In the event of a suicidal crisis, Battle Buddy’s primary focus shifts to guiding Veterans through their personalized safety plan, with the goal of creating time and space between suicidal thoughts and actions. By doing so, the app aims to play a crucial role in saving lives and providing the necessary support during critical moments.

Battle Buddy for Suicide Prevention is currently in development, employing a user-centered design process that includes Veterans at every step of the way. An early prototype received the award for Best Technical Demonstration at the International Conference on Persuasive Technology 2023, a top interdisciplinary conference focused on work at the intersection of computer science and psychology.

TRANSITIONS

As you can see, ICT is dedicated to building experiences that matter, whether it’s creating an R&D testbed to accelerate DoD simulation technologies, recording and displaying testimony in a way that will continue the dialogue between Holocaust survivors and learners far into the future, or developing virtual reality exposure therapy for veterans with post-traumatic stress. Since 1999, ICT’s projects have trained, helped and/or supported well over 250,000 service members and civilians.

Measuring impact is vital to our success. We are not just a test-bed for holodeck-style elements. Many of our projects go on to find homes within industry, military or the education field. Many ICT projects have become Programs of Record (PORs), including DisasterSim; One World Terrain; JFETS; Mobile C-IED Trainer (MCIT); Tactical Questioning IEWTPT; ELECT-BiLat and UrbanSim (Games for Training POR).

Another of our projects has found a permanent home within the Army. This is called ELITE, and is ICT’s instructional platform, delivered via digital devices, which provides opportunities to practice skills in realistic and relevant training scenarios with virtual human role-players and real-time data tracking tools which allow for structured feedback.

ELITE leverages more than a decade of USC Institute for Creative Technologies’ research into instructional design, and virtual human technologies. Thousands of personnel have been trained using this system and it is now a prerequisite for attending Army Warrant Officer School.

Over 50 licenses have been granted for our Rapid Integration and Development Environment, known as RIDE, with licensees including the Office of Naval Research and Lockheed Martin.

But our support of the warfighter doesn’t stop with training – ICT R&D has been operationalized. One World Terrain, a Joint Staff-funded project designed to assist the DoD in creating the most realistic, accurate and informative representations of the physical and non-physical landscape, began at the institute as an R&D effort alongside the National Simulation Center. It later transitioned to Army Futures Command and, in 2021, was employed by the 82nd Airborne Division to support the mass airlift from Kabul, Afghanistan.

FORCE WRITERS ROOM

Our most recent success at blending narrative entertainment industry skills with futuristic technologies is SeaStrike 2043, an 11-minute high production value short film commissioned by the US Navy. This was incubated within our Force Writers Room, which uses Hollywood-style techniques to envisage and reimagine the future, and is a contractor to the warfighter development directorate.

ICT’s Force Writers Room (FWR) offers a new way to frame and solve future-centered Department of Defense problems, and prepare warfighters to develop appropriate defensive systems. Protecting the United States is a profoundly complex task. Not only must military and homeland security defend against known threats, they must also prepare for a vast array of “unknowns.” They must visualize how advancements in technology, biology and social dynamics will impact warfare and where threats may come from, as well as identify serious issues which could bring down democracy.

FWR brings multi-disciplinary expertise, Hollywood creativity, and proven “TV writers room” techniques to visualize the future, enabling warfighters to examine “unknowns” within a highly imaginative narrative framework. FWR is co-led by ICT’s Dava Casoni, who worked as an actor in Hollywood, and also served in the Army Reserve in transportation and logistics, and Captain Ric Arthur, TV writer (NCIS as Richard C. Arthur; The Last Ship, Hawaii Five-O) and Naval officer who originated and piloted the FWR process.

SeaStrike 2043 is a powerful live-action movie which demonstrates the impact of emerging technologies, concepts and geopolitical conditions on DOD operations, providing decision-makers, warfighters, engineers, and scientists with a tangible look and feel of the future. SeaStrike presents a plausible narrative, set in the not-too-distant future, along with use cases, delivering a context within which the application of emerging technologies can be sandboxed.

SHIPSIM

Another ICT project, now underway, which utilizes narrative, learning sciences and simulation, is our advanced ShipSim, which probably comes closest to a holodeck concept in scale and ambition.

The Military Sealift Command fleet routinely operates in international waters worldwide to safeguard freedom of navigation. This has led adversary countries to carry out dangerous maneuvers to disrupt and prohibit these freedom of navigation operations. There was an urgent need to develop a physics-based simulation environment that supports swarm modeling for maximizing throughput in port operations while maintaining large-scale vessel movements, and that allows safe operations in congested conflict areas.

The Army requires a Multi-Domain Operations (MDO) ready force that can seamlessly transition from one domain to the other, such as those during maritime operations. ShipSim is a collaborative effort between the Institute for Creative Technologies and other faculty within the Viterbi School of Engineering, working in a cooperative agreement with ERDC’s Coastal and Hydraulics Laboratory (CHL). It will foster a leap forward in hyper-realistic ship motion using improvements in numerical simulations of vessel motion, Information Technology (IT), as well as computational speeds to enable the physics-based and real-time simulation of these interactions.

ICT BY THE NUMBERS

Those are some of the holodeck-style concepts which illustrate what ICT has achieved since 1999. If you are just looking for the facts, here are a few highlights to leave you with:

We are now up to 177 staffers and students – including two medical doctors and 31 PhDs, many of whom are also faculty within the USC Viterbi School of Engineering.

ICT has received many accolades in the past 24 years, including two Scientific and Technical Academy Awards. We have produced more than 2,000 peer-reviewed publications with 100,000+ citations. Our faculty have received over 140 honors and awards. Our research has garnered 278 intellectual property disclosures and 29 patents. We have 3 AAAI Fellows.

Finally, we are committed to encouraging and inspiring the next generation.

Last year our strong intern program had participants from 19 universities, as well as cadets from the Air Force Academy and United States Military Academy at West Point. It’s a lively scene, which we relish. The influx of new ideas and a multi-generation and cultural exchange is what keeps us vibrant, and at the top of our game, as we build out more holodeck-style initiatives for the future.

This is adapted from a speech given by Dr. Randall Hill, Executive Director, ICT at IEEE AIxVR 2024.