Human-inspired Adaptive Teaming Systems (HATS)

Research Lead: Volkan Ustun

-

Synthetic entities in current military simulations (e.g., OneSAF) have limited behaviors and are usually generated by rule-based/reactive computational models, which have minimal intelligence and cannot adapt based on experience. Effective synthetic entities need human-inspired adaptive cognitive models that communicate, perceive their environment and others in it, draw conclusions, reason, and choose appropriate actions. Experiential learning is essential for developing such synthetic characters with credible behavior because attempting to achieve realistic and convincing behavior solely through strict behavioral control would be highly impractical.

At the HATS Lab, our mission is to build autonomous synthetic characters or Non-Player Characters (NPCs) that are not only aware of human trainees’ needs but also capable of providing realistic and challenging learning experiences in military training simulations. Our approach augments Multi-agent Reinforcement Learning (MARL) models with GNNs (Graph Neural Networks), drawing inspiration from a wide range of disciplines, including operations research, human judgment and decision-making, game theory, graph theory, and cognitive architectures. This interdisciplinary approach allows us to better address the complex challenges in this field, keeping us at the forefront of research and development.

Our group historically leveraged cognitive architectures and developed the Sigma Cognitive Architecture to devise computational minds for synthetic characters. More recently, we have broadened our focus to devise interactive decision support systems for interactive simulation environments to aid commanders in gaining decision advantage by generating courses of action and proposing tactics and behaviors. In this shift, we have heavily incorporated machine learning approaches, primarily multi-agent reinforcement learning, in our group.

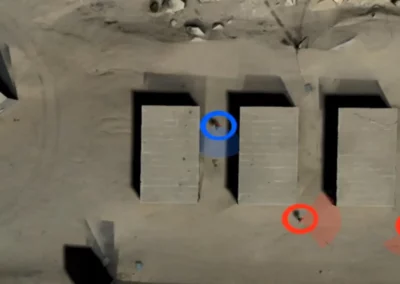

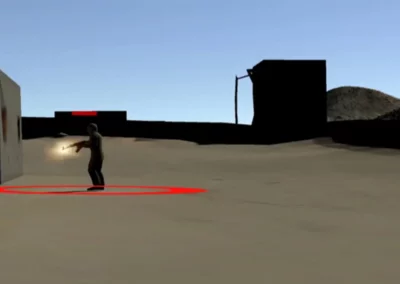

To date, we have successfully created proof-of-concept behavior models for various military training scenarios, leveraging our enhanced MARL framework. We utilize geo-specific terrains in Unity and abstractions derived from these terrains as simulation environments. Our current research focuses on incorporating domain knowledge, such as tactics, military hierarchies, etc., to enhance and speed up our MARL experiments. Our sample simulation environments and enabling libraries for running MARL experiments can be found at our group’s public repository [https://github.com/HATS-ICT]