Research Experiences for Undergraduates (REU)

Our REU site is suspended for summer 2025.

Please visit us in December to apply for summer 2026.

The Institute for Creative Technologies (ICT) offers a 10-week summer research program for undergraduates in intelligent interactive experiences. A multidisciplinary research institute affiliated with the University of Southern California, the ICT was established in 1999 to combine leading academic researchers in computing with the creative talents of Hollywood and the video game industry. Having grown to encompass over 130 faculty, staff, and students in a diverse array of fields, the ICT represents a unique interdisciplinary community brought together with a core unifying mission: advancing the state of the art for creating virtual experiences so compelling that people will react as if they were real.

Reflecting the interdisciplinary nature of ICT research, we welcome applications from students in computer science, as well as many other fields, such as psychology, art/animation, interactive media, linguistics, and communications. Undergraduates will join a team of students, research staff, and faculty in one of several labs focusing on different aspects of interactive virtual experiences. In addition to participating in seminars and social events, students will also prepare a final written report and present their projects to the rest of the institute at the end of the summer.

This Research Experiences for Undergraduates (REU) site is supported by a grant from the National Science Foundation.

ICT also offers another internship program for both undergraduate and graduate students, which requires a separate application. View a list of available positions here. For questions or additional information, please contact reu@ict.usc.edu.

Location and Housing

The ICT facility is located in the Playa Vista community of West Los Angeles, about 10 miles west of the main USC campus, and includes a 150-seat theater, game room, and gym. There are numerous restaurants and stores within walking distance, including the Westfield Culver City mall, and the beach is only a 10-minute drive away. Housing is on the main USC campus in downtown L.A., with a free shuttle between campus and the institute.

Benefits

- Participate in a unique multidisciplinary community that combines academic research with the creative talents of Hollywood and the video game industry.

- Work with some of the leading researchers in human-computer interaction, virtual reality, computer graphics, and virtual humans.

- Receive a total of $8,650 over the 10-week program in a combination of stipend, meal allowance, and transportation allowance.

- Receive university housing for the duration of the program.

- Receive travel reimbursement of up to $600 if you live 95 miles or more outside the Los Angeles area.

Eligibility

- U.S. citizenship or permanent residency is required.

- Students must be currently enrolled in an undergraduate program.

- Students must not have completed an undergraduate degree prior to the summer program.

Important Dates

- Applications open: January 29, 2025

- Application deadline: March 21, 2025

- Notification of acceptance begins: March 2025

- Notification of declined applicants: April 2025 (exact date TBD)

- Start Date: June 3, 2025

- End Date: August 8, 2025

How to Apply

Please visit us in December to apply for summer 2026.

Tips for filling out your application:

- There are two open-ended questions on the application form: a question about academic background and interests, and a personal statement where you can write additional information which you feel is relevant to your application.

- Include an unofficial transcript.

- Provide the contact information of a faculty member who will write a letter of reference, and give your recommender plenty of notice.

Research Projects

When you apply, we will ask you to rank your interests from the research projects listed below. We encourage applicants to explore each mentor’s website to learn more about the individual research activities of each lab.

Natural Language Dialogue Processing

Mentors: David Traum, Ron Artstein, and Kallirroi Georgila

The Natural Language Dialogue Group at ICT is developing artificial intelligence and language technology to allow machines to participate in human-like natural dialogues with people. Our systems include virtual humans, robots, recorded real people, and voice and chat systems. An REU student will work on creating, extending or evaluating such systems; analyzing conversational data or collecting new data; or other topics using dialogue data and state-of-the-art machine learning methods. Specific projects can be chosen or defined by the REU student; examples include technology to allow an agent or robot to understand the context of a conversation, take initiative, or sustain interaction over multiple encounters; use of large language models for system response generation; and reinforcement learning of system policies. Previous REU students in the group have been lead authors and had their REU projects published in the proceedings of international scientific conferences.

AI Agents for Analyzing and Mitigating Cross-cultural Conflict

Mentors: Jonathan Gratch and Gale Lucas

Conflicts evoke emotion and often arise from differences in underlying personal and cultural values. Our research uses AI to gain insight into how culture shapes disputes, and develops methods to teach and coach conflict-resolution skills. We adopt a multidisciplinary approach that combines theories from psychology and dispute resolution with artificial intelligence methods (e.g., use of machine learning and large language models). Students will work with a large multicultural corpus of disputes. REU students are sought to assist with developing research software, experiments, and data analysis. Candidates should have good programming skills. Prior experience with large language models, including fine-tuning and reinforcement learning, is a benefit. Fluency in Mandarin is also a benefit.

AI and Machine Learning for Intelligent Tutoring Systems

Mentor: Benjamin Nye

This project will explore applications of Artificial Intelligence and Machine Learning to support personalized tutoring and dialog systems such as the Personal Assistant for Life-Long Learning (PAL3) or CareerFair.ai virtual mentors (MentorPal). PAL3 is a system for delivering engaging and accessible education via mobile devices. PAL3 helps learners navigate learning resources through an embodied pedagogical agent that acts as a guide and a persistent learning record that tracks what students have done, their level of mastery, and what they need to achieve. CareerFair.ai / MentorPal is an open-source project that is developing a virtual career fair framework, where STEM mentors can self-author a virtual mentor based on their video-recorded answers. The goal of the REU internship will be to expand the repertoire of the system to further enhance learning and engagement. The specific tasks will be determined collaboratively based on research goals and student research interests. Students will be expected to contribute to a peer-reviewed publication.

Possible topics include:

(1) working with generative AI models to develop intelligent tutoring content;

(2) leveraging multimodal models in learning technologies;

(3) modifying the intelligent tutoring system and how it supports the learner; and

(4) statistical analysis and/or data mining to identify patterns of interactions between human subjects and the intelligent tutoring system.

Real-Time Modeling and Rendering of Virtual Humans

Mentor: Yajie Zhao

The Vision and Graphics Lab at ICT conducts research and production work on high-quality facial scans for Army training and simulations, as well as for VFX studios and game development companies. Ongoing research explores how data-driven deep learning networks can model 3D data effortlessly, in place of traditional methods. This requires large amounts of data, more than can be obtained from raw light stage scans alone. We are currently working on software both to visualize our new facial scan database and to animate and render virtual humans. To realize and test the usability of this software, we need a tool that can model and render the created avatar through a web-based GUI. The goal is a real-time, responsive web-based renderer on a client, controlled by software hosted on the server. REU interns will work with lab researchers to develop a tool that visualizes assets generated by the machine learning model of the rendering pipeline in a web browser using a Unity plugin, and to integrate deep learning models that are called through web-based APIs. This will include developing the latest techniques in physically based real-time character rendering and animation. Ideally, the intern will be familiar with physically based rendering, subsurface scattering techniques, hair rendering, and 3D modeling and reconstruction.

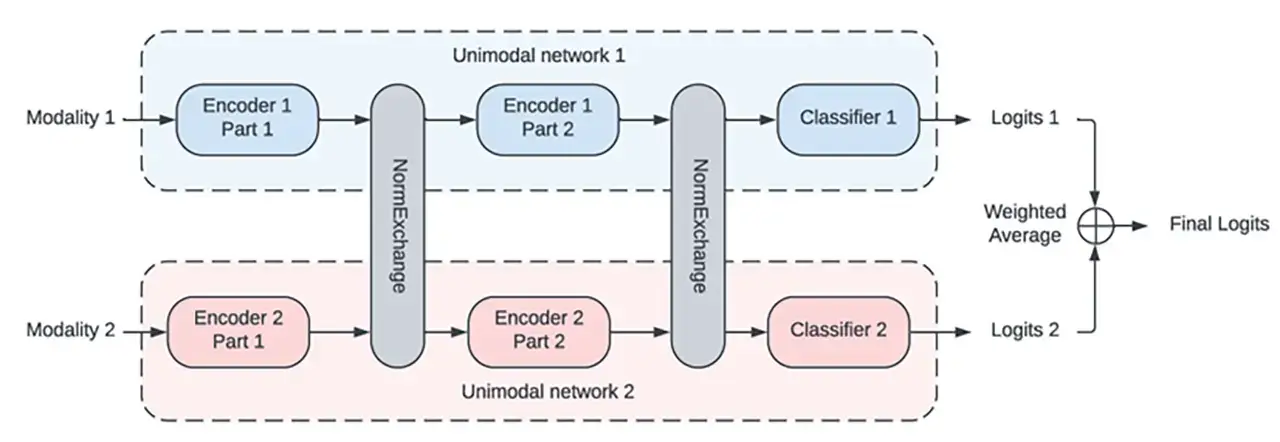

Machine Learning for Human Behavior Understanding

Mentor: Mohammad Soleymani

The Intelligent Human Perception Lab at USC’s Institute for Creative Technologies conducts research on automatic human behavior analysis. To model human emotions and behaviors, we research machine learning methods that can fuse multiple modalities and generalize to new people. We are seeking REU interns to work on related research tasks, including the following topics: multimodal LLMs for behavior understanding, multimodal learning for emotion recognition and sentiment analysis, and generative AI for human behavior generation. REU interns who are interested in these topics should be comfortable handling data and able to program in Python or C#. Prior experience with machine learning frameworks, e.g., PyTorch, is a plus.

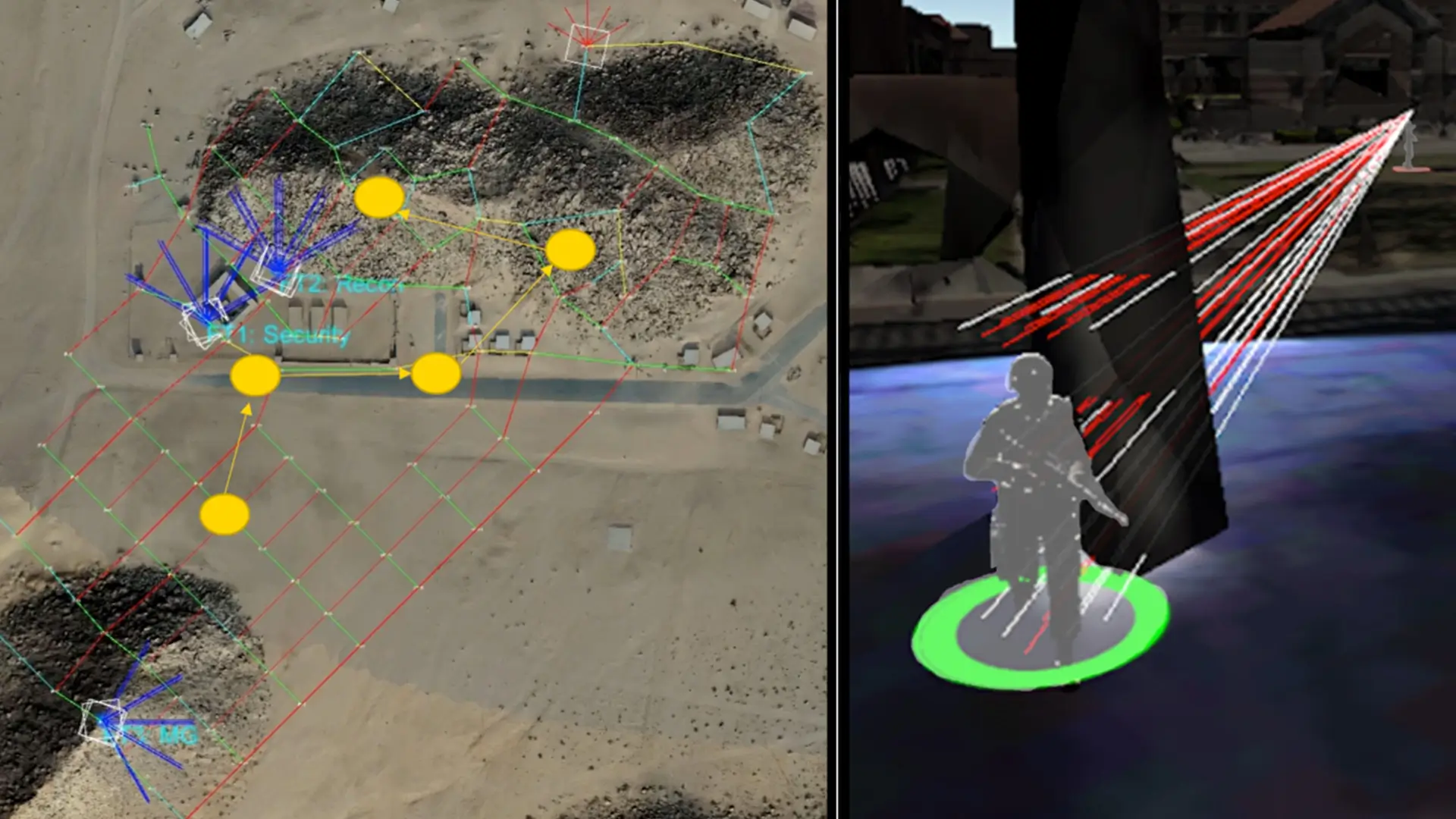

Human-inspired Adaptive Teaming Systems

Mentors: Volkan Ustun and Nikolos Gurney

Multi-agent reinforcement learning (MARL) is increasingly used in military training to build dynamic and adaptive synthetic characters for interactive simulations. The effectiveness of MARL algorithms relies heavily on the quality of the scenarios used in machine learning experiments. High-quality scenarios can lead to faster convergence, which is critical in MARL experiments given the immense computational requirements of MARL algorithms. However, creating such scenarios is not trivial. Our research addresses this challenge by building a scenario generation capability that leverages generative transformer models to produce machine-learning-friendly simulation scenarios based on effective prompts and a data bank of training content (text and images) associated with a capability. Student researchers will contribute to this research by running scenario generation experiments and assessing the value of generated scenarios in MARL.