Background

Synthetic Aperture Radar (SAR) is a remote sensing technology that produces imagery of the Earth's surface from high-altitude satellites and other sensor platforms by transmitting microwave radar pulses toward the surface and processing the reflected signals. However, SAR images are difficult for humans to interpret because of geometric distortions, multipath reflections, and other phenomena.

Computer vision systems can automatically search imagery for relevant or important content, but the machine learning models behind them depend on large datasets accurately labeled by humans. Existing labeled SAR datasets are insufficient. To apply computer vision to SAR imagery successfully, we need a way for humans to produce more accurately labeled SAR images, faster.

Objectives

ICT's MxR Lab and Learning Sciences team have collaborated with ARL-West researchers to develop an alternative approach: an interactive training interface built around augmented, body-based perceptual learning. By approximating the natural experiences through which humans build their visual understanding of 3D objects in the ordinary optical world, the project aims to help users develop an intuitive understanding of SAR imagery.

In effect, the goal is to allow humans to "see like a satellite."

Results

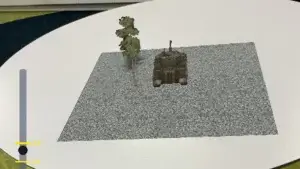

A paper describing the work was accepted for presentation at I/ITSEC 2024. The teams have created an augmented reality (AR) prototype of an interactive system that lets a user experience different SAR viewing angles by physically moving their body to the angular positions a SAR satellite might occupy above a scene.

Using a mobile phone or tablet, the trainee can intuitively view a semi-realistic virtual scene containing significant objects (e.g., a tank, a helicopter, a rocket launcher) anchored to a flat surface. The phone or tablet simulates the view of an aerial sensor, and the user can switch between the more realistic electro-optical (EO) image and the SAR image of the same view, providing a spatially accurate A/B comparison.

Next Steps

The team hypothesizes that body-based interaction with AR-generated 3D content will further improve users' ability to recognize objects in SAR imagery, and that the system will be useful for gathering critical training data.

This data would be highly valuable: it would record performance metrics for image labelers in training, and it could also be used to refine machine learning algorithms aimed at improving automated SAR image interpretation in the future.
