Learning Sciences

Research Lead: Ben Nye

The ICT Learning Sciences group researches how AI can increase learning and help learners accomplish their goals. Our mission is to study and transition effective AI learning sciences research to transform the outcomes and equity of education for learners. We are also strongly focused on tools that empower instructors and content developers to modify or create content that leverages AI. We drive innovation with research projects and through two research centers: the AI Research Center of Excellence for Education (AIRCOEE) and the USC Center for Generative AI and Society.

Prior transitions from the Learning Sciences group have reached thousands of military and civilian learners. In collaboration with other ICT groups, we developed prototypes (CVIT, ELITE, and UrbanSim) that reached military training programs and helped tens of thousands of learners. Another collaborative effort, the NSF Virtual Human Museum Guides project, helped hundreds of thousands of visitors learn about computer science with interactive 3D agents at the Boston Museum of Science. The CareerFair.ai project has built on these approaches to provide learners with a virtual career fair of AI agents, which can be customized for any institution (e.g., the DoD STEM Virtual Mentors).

Our recent research has advanced in five major areas.

Adaptive Learning for Upskilling:

- Goal: Develop AI recommenders and coaches that support learners throughout their careers and help them navigate career transitions successfully. Typically, gaps in training lead to skill decay because learners lack the structure and motivation to continue studying.

- Approach: The Personal Assistant for Life-Long Learning (PAL3) is our primary framework, which connects learners with a variety of resources including intelligent tutoring dialogs, virtual coaches and mentors, and interactive simulations.

- Results: Systems based on PAL3 have demonstrated learning gains in domains ranging from electronics to leadership. Recent key efforts include the Navy SAFER mobile app for suicide prevention training, the web-based Air Force Proficiency-Based Sexual Assault Training (PB-SAT), and Game-If-AI, a hybrid web/mobile app that builds artificial intelligence skills for civilian and military learners.

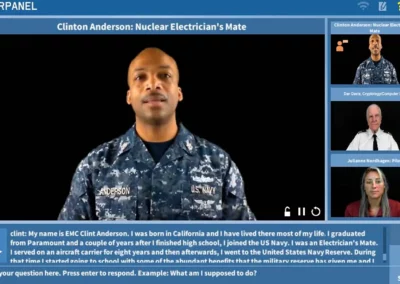

Virtual Mentoring at Scale:

- Goal: Amplify the knowledge and experience of a broad set of mentors through a framework in which they can build an interactive AI virtual mentor by video-recording their answers to questions (no specialized technical skills needed).

- Approach: The MentorPal platform enables mentors to self-record videos answering common questions, which are processed to build a question-answering virtual mentor. A mentor or a panel of mentors offers a personalized conversation by responding to each learner question with the video that best answers it.

- Results: In the NDEP CareerFair.ai project, both college and high school students reported that the virtual mentors helped them understand career topics they needed to know, and 96% reported they would recommend it to others.

Intelligent Tutoring Frameworks:

- Goal: Advance the state of the art in AI-based tutorial dialog systems such that a standard instructor can easily author or modify them.

- Approach: The OpenTutor project has developed a reusable dialog system that allows an instructor to create their first tutor in under an hour. This research leverages traditional NLP and generative AI models to understand student answers from limited examples. We are also researching techniques to generate reliable conversational AI models using generative AI and classroom readings or videos.

- Results: In the ElectronixTutor (Navy) project, joint work with the University of Memphis, we evaluated OpenTutor’s predecessor AutoTutor in a large-scale pilot study on Navy Nuclear Power Fundamental Electronics skills, and found statistically significant learning gains versus baseline classes. In the Advancing Teachers Proportional Reasoning (IES) project, we tutored math professional development using a virtual human facilitator who combined conversation with virtual manipulatives such as interactive graphs, calculators, and tables. In the COPE project (Army MOMRP), we conducted a randomized study of sensitive/subjective topics compared to technical topics, and found that sensitive tutoring styles offered benefits on sensitive topics compared to traditional tutoring dialogs.

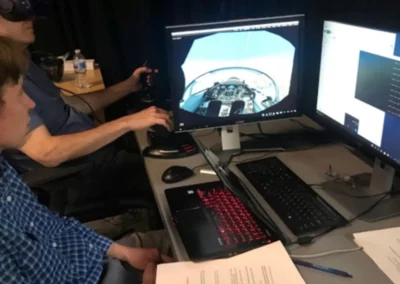

Engagement Data Mining:

- Goal: Apply machine learning to measure and increase engagement in computer-based learning, in contexts ranging from traditional online courseware to VR flight training.

- Approach: Use machine learning to process available data sources, including facial video, interaction logs, and surveys, in order to detect and respond to engagement patterns.

- Results:

  - ENGAGE (Army): Multi-modal analysis of engagement based on facial video feeds, behavioral logs, and self-report surveys. We developed reinforcement learning models to increase learning through coaching.

  - SMART-E (Army): Semi-supervised machine learning to classify different types of engagement/disengagement, by leveraging unlabeled training data plus a small number of play-testers who act out engagement types. We demonstrated that this general-purpose approach works for traditional online courseware as well as scenario-based training.

  - OMEGA (AFRL): Machine learning metrics to detect engagement and distraction during Pilot Training Next (PTN) flight simulations. Based on these metrics, we developed and evaluated a recommender for instructor interventions. This work was a collaboration with Eduworks and won the I/ITSEC 2021 Best Paper award for Training.

Social Learning in Augmented Reality:

- Goal: Design and evaluate AR technologies that enable learning in situ (e.g., on the job) and with other learners, where the technology helps learners discuss and connect with each other (as opposed to being separated by a screen).

- Approach: Exploratory research focusing on design principles and lessons learned for AR that encourage hands-on learning and small-group learning (e.g., discussions).

- Results: Tar AR (NSF) enables learners to experience and reconstruct virtual simulations of prehistoric environments on-site at La Brea Tar Pits. Evaluation studies showed strong learning gains across all AR conditions, as well as indications that phone AR without headsets can still be highly effective. We also have other ongoing collaborations with ICT’s Mixed Reality group on adaptive maintenance training with AR, adaptive head-mounted display interfaces (HMDi), and visual abstractions for synthetic training environments (VAST).