Background

The OpenMG 2.0 effort aims to advance the use of naturalistic hand motions to control virtual and mixed reality simulations, with specific functionality for medical and surgical interactions. The OpenMG SDK framework is open source and device independent, so a variety of 3D optical and wearable sensors can be used for these interactions. OpenMG 2.0 builds on prior AI-based gesture recognition efforts to create a robust, naturalistic interaction scheme.

Objectives

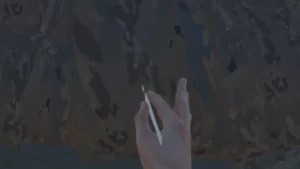

OpenMG seeks to make virtual experiences more naturalistic and easier to interact with, and it is designed to work with any 3D camera or sensor glove, current or future. With this, users can touch, twist, move, throw, press, and use tools in virtual and mixed reality training. Using Smart Object technology, medical tools, devices, and other virtual instruments can be used much like their real-world counterparts. Our models are designed to do more than interpret hand position and gestures; we intend to interpret user intent and assist users in the tasks they are attempting.

Results

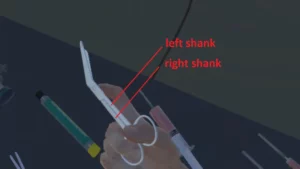

We have created a medical test environment in which users can employ tools common in medical training, such as scissors, bandages, syringes, scopes, scalpels, and trocars. We’ve created technology that simplifies natural empty-hand interactions with these objects. We’ve also demonstrated the natural qualities of our physics models with a VR baseball toss scenario involving a virtual character.

Next Steps

We are currently working to transition OpenMG by integrating it with three military medical simulations: a mass casualty triage scenario, a mass casualty combat medical simulator, and a surgical simulator.

Published academic research papers are available here. For more information, contact us.