BYLINE: Rong Liu, Dylan Sun, Dr. Meida Chen, and Dr. Andrew Feng

A New Paradigm in Real-Time Radiance Field Rendering

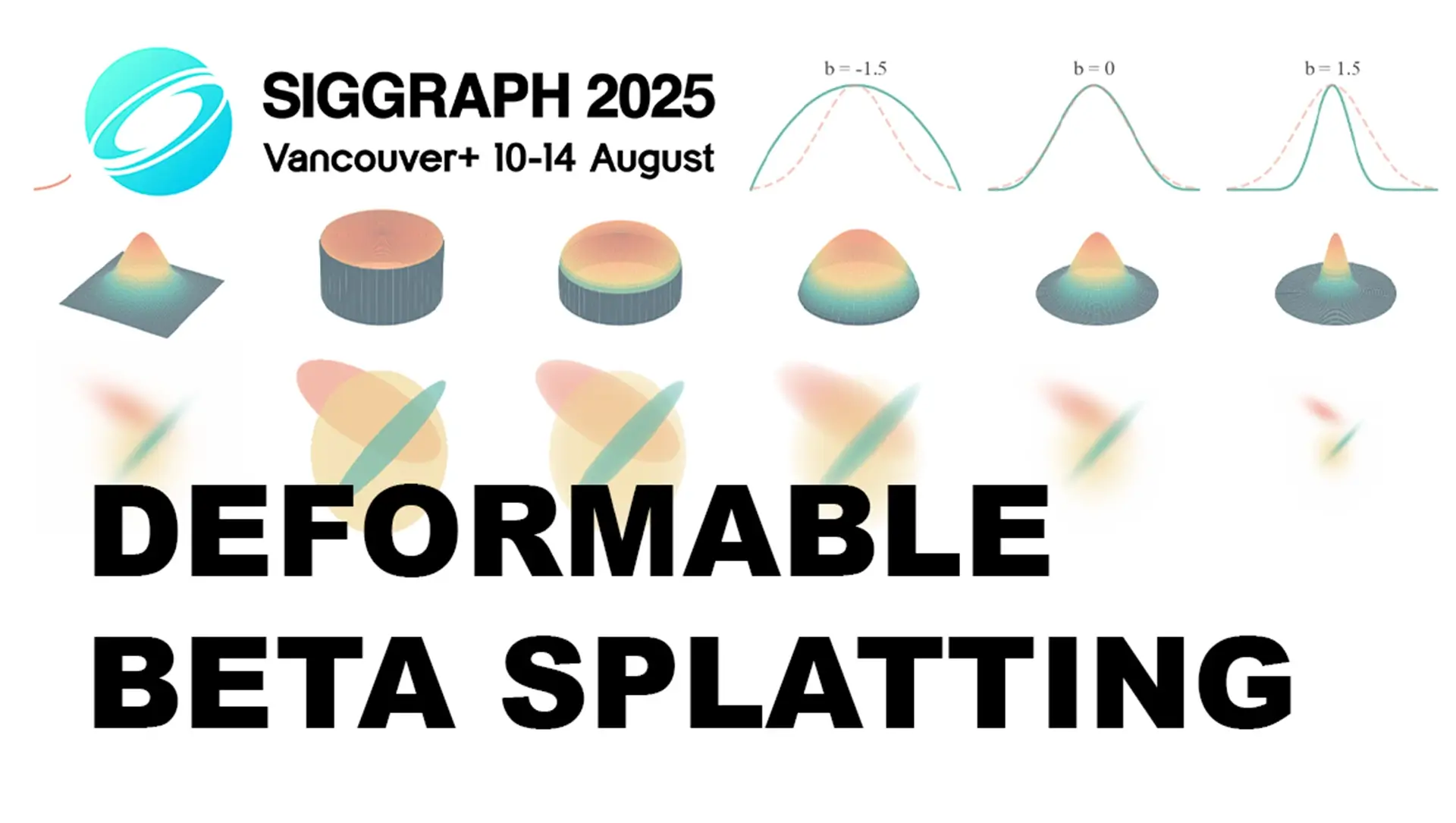

We are pleased to share that our paper, Deformable Beta Splatting (Rong Liu, Dylan Sun, Meida Chen, Yue Wang, and Andrew Feng), has been accepted for presentation at SIGGRAPH 2025, to be held in Vancouver, Canada (August 10–14). This work introduces a novel rendering paradigm that redefines the capabilities of neural representations in high-fidelity 3D scene synthesis.

From Gaussian to Beta: Redesigning the Rendering Primitive

While 3D Gaussian Splatting (3DGS) has advanced real-time radiance field rendering, its fixed Gaussian kernels and low-order spherical harmonic (SH) colour encoding impose inherent limits on geometric precision and fine detail. Our method, Deformable Beta Splatting (DBS), takes a fundamentally different approach.

DBS replaces Gaussians with deformable Beta kernels: bounded, frequency-adaptive primitives that offer sharper control over shape, density, and locality. This flexibility lets DBS capture high-frequency scene structure, such as fine surface relief and edges, more accurately while significantly reducing computational overhead. In empirical evaluations, DBS achieves higher visual quality than comparable 3DGS methods while using only 45% of their parameters and rendering 1.5 times faster.
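To make the contrast with Gaussians concrete, here is a minimal Python sketch of a bounded kernel next to a Gaussian. It assumes a Beta-style radial profile of the form (1 - r^2)^beta with support r < 1, where r is a point's normalised distance from the primitive centre and beta > 0 is the shape exponent. The function names, this exact form, and the direct use of beta (rather than however the authors parameterise it) are illustrative assumptions, not the DBS implementation.

```python
import math
from typing import Sequence


def normalized_radius(p: Sequence[float], center: Sequence[float],
                      extents: Sequence[float]) -> float:
    """Distance of p from the primitive centre in units of its per-axis extents.

    Axis-aligned for brevity; a real primitive would also apply a rotation.
    """
    return math.sqrt(sum(((pi - ci) / si) ** 2
                         for pi, ci, si in zip(p, center, extents)))


def beta_kernel(r: float, beta: float) -> float:
    """Assumed Beta-style profile (1 - r^2)^beta with compact support r < 1.

    Small beta gives a flat-topped, box-like footprint; large beta
    concentrates the weight near the centre.
    """
    if beta <= 0.0:
        raise ValueError("shape exponent must be positive")
    if r >= 1.0:
        return 0.0  # exactly zero outside the support: no long tails
    return (1.0 - r * r) ** beta


def gaussian_kernel(r: float) -> float:
    """Gaussian falloff exp(-r^2 / 2) for comparison; it has no hard cutoff."""
    return math.exp(-0.5 * r * r)


if __name__ == "__main__":
    r = normalized_radius((0.5, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
    for beta in (0.25, 1.0, 4.0, 16.0):
        print(f"beta={beta:>5}: B(r={r}) = {beta_kernel(r, beta):.4f}")
    print("outside support:", beta_kernel(1.2, 4.0),
          "vs Gaussian:", gaussian_kernel(1.2))
```

Because this profile is exactly zero beyond r = 1, each primitive affects only the pixels inside its footprint, whereas a Gaussian has to be truncated by hand; varying beta moves the footprint between box-like and sharply peaked.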

Unified Representation of Colour and Illumination

Extending the Beta kernel into the radiometric domain, we propose Spherical Beta, a generalised colour encoding model that consolidates diffuse and specular components into a unified framework. Spherical Beta directly learns bounded specular lobes without requiring explicit normals or lighting direction, thereby streamlining the rendering pipeline and enhancing physical plausibility.

This modelling approach departs from traditional spherical harmonics and introduces new capabilities for view-dependent rendering—delivering photorealistic highlights through learnable, compact representations.
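The sketch below illustrates what a view-dependent colour built from bounded lobes could look like. It is a hypothetical stand-in for Spherical Beta, not the authors' formulation: it assumes each primitive stores a diffuse RGB colour plus a few learned lobes (a direction, a shape exponent, and an RGB tint each), and it assumes a lobe profile of (1 - x)^beta evaluated at x = 1 - cos(theta), which reduces to a clipped, Phong-style cos^beta lobe. No surface normal or light direction appears anywhere, matching the description above.

```python
import math
from typing import Iterable, Sequence, Tuple

RGB = Tuple[float, float, float]
Vec3 = Sequence[float]


def _unit(v: Vec3) -> Tuple[float, float, float]:
    """Normalise a 3-vector."""
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def lobe_weight(view_dir: Vec3, lobe_dir: Vec3, beta: float) -> float:
    """Assumed bounded lobe (1 - x)^beta with x = 1 - cos(theta), zero past 90 degrees."""
    v, m = _unit(view_dir), _unit(lobe_dir)
    cos_t = v[0] * m[0] + v[1] * m[1] + v[2] * m[2]
    return max(0.0, cos_t) ** beta


def spherical_beta_colour(view_dir: Vec3, diffuse: RGB,
                          lobes: Iterable[Tuple[Vec3, float, RGB]]) -> Tuple[RGB, RGB]:
    """Return the (diffuse, specular) terms for one primitive and one view direction."""
    spec = [0.0, 0.0, 0.0]
    for lobe_dir, beta, rgb in lobes:
        w = lobe_weight(view_dir, lobe_dir, beta)
        for i in range(3):
            spec[i] += w * rgb[i]
    return diffuse, (spec[0], spec[1], spec[2])


if __name__ == "__main__":
    lobes = [((0.0, 0.3, 1.0), 32.0, (0.8, 0.8, 0.8))]  # one sharp white highlight
    for d in [(0.0, 0.3, 1.0), (0.0, 1.0, 0.0)]:
        diff, spec = spherical_beta_colour(d, (0.4, 0.2, 0.1), lobes)
        full = tuple(min(1.0, a + b) for a, b in zip(diff, spec))
        print(d, "diffuse:", diff, "full:", full)
```

Returning the diffuse and specular terms separately is a deliberate choice in this sketch: dropping or rescaling the specular term is enough to isolate the two channels.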

Frequency-Aware Geometry Decomposition

A defining feature of DBS is its capacity for geometry-aware decomposition. The kernel's shape parameter intrinsically controls the spatial frequency of each primitive, allowing a principled separation of coarse and fine features. Operations such as frequency masking, progressive refinement, and scene editing can therefore be carried out analytically, by selecting primitives according to their shape parameter.
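As a rough illustration of frequency masking and progressive refinement, the sketch below filters and orders primitives by their shape exponent alone. The Primitive record, the threshold, and the direction of the mapping (a small exponent treated as broad, coarse geometry and a large one as fine detail) are assumptions made for this example, not values or conventions taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Primitive:
    """Hypothetical minimal record holding only what this sketch needs."""
    name: str
    beta: float  # kernel shape exponent, assumed here to set spatial frequency


def split_by_frequency(prims: List[Primitive],
                       threshold: float) -> Tuple[List[Primitive], List[Primitive]]:
    """Frequency masking: partition primitives into (coarse, fine) by shape exponent."""
    coarse = [p for p in prims if p.beta <= threshold]
    fine = [p for p in prims if p.beta > threshold]
    return coarse, fine


def progressive_order(prims: List[Primitive]) -> List[Primitive]:
    """Progressive refinement: order primitives from coarsest to finest."""
    return sorted(prims, key=lambda p: p.beta)


if __name__ == "__main__":
    scene = [Primitive("wall", 0.5), Primitive("brick_edge", 12.0),
             Primitive("floor", 1.0), Primitive("carving", 20.0)]
    coarse, fine = split_by_frequency(scene, threshold=4.0)
    print("coarse:", [p.name for p in coarse])
    print("fine:  ", [p.name for p in fine])
    print("order: ", [p.name for p in progressive_order(scene)])
```

Rendering only the coarse list gives a low-frequency view of the scene; streaming primitives in the progressive order refines it step by step.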

This structural control extends to the lighting domain as well. By modulating the diffuse and specular channels of the Spherical Beta model, the system can selectively isolate and manipulate light–matter interactions, supporting new workflows for stylised rendering.

Generalising Densification: A Kernel-Agnostic Solution

A critical challenge in radiance field learning is the effective densification of primitives. Previous methods have been tightly coupled to Gaussian properties, rendering them incompatible with alternative kernels. Our work introduces a mathematically grounded solution: Kernel-Agnostic MCMC.

We demonstrate that, by regularising opacity alone, densification remains distribution-preserving under Markov Chain Monte Carlo sampling, independent of both the underlying kernel type and the number of densified primitives. This theoretical result significantly broadens the applicability of splatting-based techniques.
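The opacity side of MCMC-style densification can be sketched in a few lines. Assuming a primitive with opacity alpha is replaced by n copies at the same location and composited with standard alpha blending, giving each copy opacity 1 - (1 - alpha)^(1/n) leaves the composited opacity at the primitive's centre unchanged for any n, and the rule never inspects the kernel's shape. The function names are hypothetical, and this illustrates an opacity-only update rather than reproducing the authors' derivation; away from the centre, where the kernel value is below one, the match is only approximate.

```python
from typing import Iterable


def split_opacity(alpha: float, n: int) -> float:
    """Per-copy opacity for n co-located copies of a primitive with opacity alpha.

    Solves 1 - (1 - a)^n = alpha for a. Only opacity changes; shape, scale and
    colour are copied unchanged, so nothing here depends on the kernel type.
    Exact at the kernel centre (kernel value 1); approximate elsewhere.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 - (1.0 - alpha) ** (1.0 / n)


def composite_opacity(alphas: Iterable[float]) -> float:
    """Opacity of stacked layers under alpha compositing: 1 - prod(1 - a_i)."""
    transmittance = 1.0
    for a in alphas:
        transmittance *= 1.0 - a
    return 1.0 - transmittance


if __name__ == "__main__":
    alpha = 0.6
    for n in (1, 2, 4, 8):
        a = split_opacity(alpha, n)
        print(f"n={n}: per-copy alpha={a:.4f}, "
              f"composited={composite_opacity([a] * n):.4f}")
```

The composited value printed for every n equals the original 0.6 up to floating-point rounding, which is the sense in which the update is independent of how many copies are made.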

Surpassing the State-of-the-Art

Deformable Beta Splatting achieves state-of-the-art visual quality with fewer parameters, a smaller storage footprint, and faster rendering, establishing a new benchmark for real-time scene reconstruction and interactive rendering. These results point toward a post-NeRF future built on richer, more expressive primitives.

We look forward to sharing this work with the broader SIGGRAPH community and engaging in dialogue around the future of real-time neural rendering.

Author Bios

Rong Liu is a Research Engineer in the Geospatial Lab at the USC Institute for Creative Technologies, focusing on the intersection of Computer Vision, Computer Graphics, and Machine Learning.

Dylan Sun is a student researcher in the Geospatial Lab at the USC Institute for Creative Technologies. He is currently researching Computer Graphics and Machine Learning, with an interest in concept art and game design.

Dr. Meida Chen received his Ph.D. from the Department of Civil and Environmental Engineering at the University of Southern California in 2020, following an M.S. in Computer Science and an MCM in Construction Management (Civil Engineering), also from USC. He joined the USC Institute for Creative Technologies as a Research Assistant in 2017 and was promoted twice: to Research Associate in 2020 and to Senior Research Associate in 2022.

Dr. Andrew Feng, Associate Director of Geospatial Research at the Institute for Creative Technologies, leads the Geospatial Terrain Research group, which focuses on geospatial R&D initiatives in support of the Army’s One World Terrain project. He joined ICT in 2011 as a Research Programmer specializing in computer graphics, then served as a Research Associate focusing on 3D avatar generation and motion synthesis before becoming a Research Computer Scientist within the Geospatial Terrain Research group. He received his Ph.D. and M.S. degrees in computer science from the University of Illinois at Urbana-Champaign.
