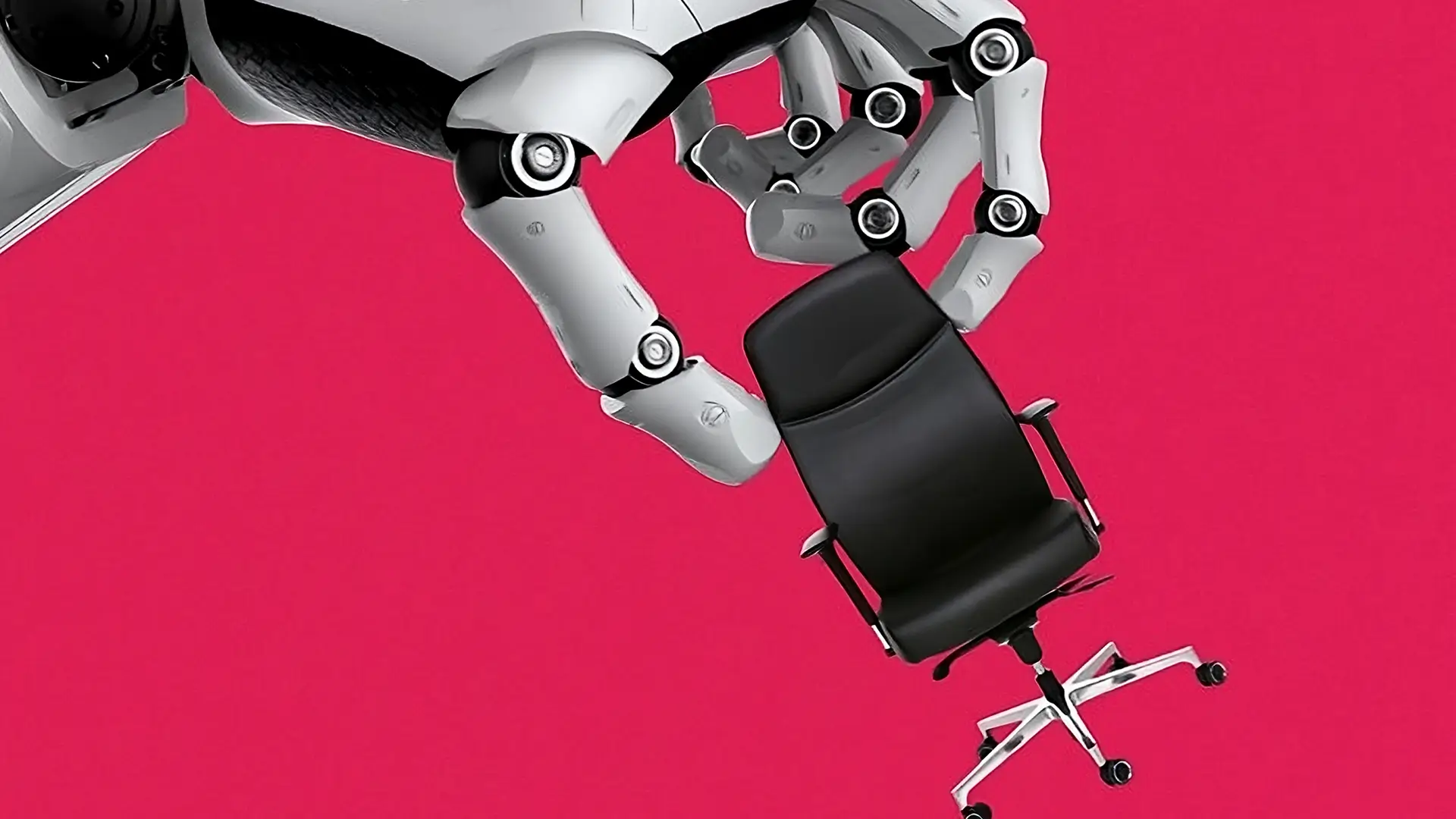

We are already seeing the rise of digital assistants that speak with a human voice and can use human appearance and social intelligence to negotiate disputes, brainstorm business strategies or conduct interviews. But our research illustrates that people may act less ethically when collaborating via AI.

Traditionally, teammates establish emotional bonds, show concern for each other’s goals and call out their colleagues for transgressions. But these social checks on ethical behavior weaken when people interact indirectly through virtual assistants. Instead, interactions become more transactional and self-interested.

For instance, in typical face-to-face negotiations, most people follow norms of fairness and politeness. They feel guilt when taking advantage of their partner. But the dynamic changes when people use an AI to craft responses and strategies: In these situations, we found, people are more likely to instruct an AI assistant to use deception and emotional manipulation to extract unfair deals when negotiating on their behalf.

Understanding these ethical risks will become an active focus of business policy and AI research.

—Jonathan Gratch, professor of computer science, University of Southern California