The Geospatial Research Lab at the USC Institute for Creative Technologies (ICT) will present its latest technical paper tomorrow at SIGGRAPH 2025, the premier conference and exhibition on computer graphics and interactive techniques.

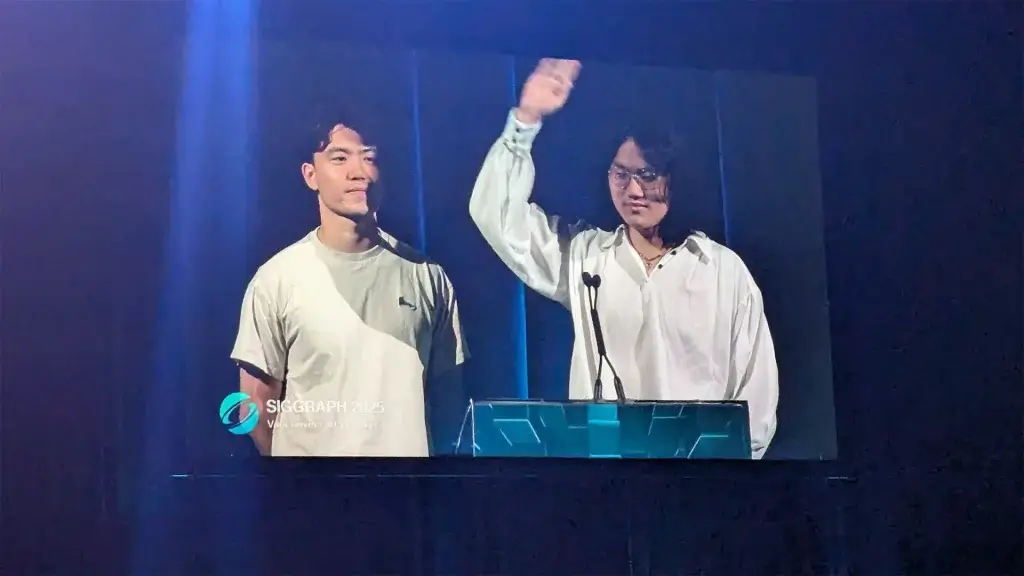

[L – R: Rong Liu and Dylan Sun]

The paper, Deformable Beta Splatting (DBS), will be featured in the “Splatting Bigger, Faster, and Adaptive” session on Wednesday, August 13, from 10:45 a.m. to 12:35 p.m. PDT in Rooms 301–305 of the West Building. The team’s presentation is scheduled for 11:35–11:45 a.m. PDT. Full session details are available in the SIGGRAPH 2025 conference schedule.

Authored by Rong Liu, Dylan Sun, Meida Chen, Yue Wang, and Dr. Andrew Feng, DBS introduces a new approach for real-time radiance field rendering. The method uses deformable Beta Kernels with adaptive frequency control for both geometry and color encoding. This enables accurate capture of complex geometries and lighting while using 45 percent fewer parameters and rendering 1.5 times faster than the current benchmark, 3DGS-MCMC.
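To give a rough sense of what a "deformable" kernel means here, the sketch below evaluates a bounded, Beta-style kernel whose shape is controlled by a single parameter. The functional form `(1 - x) ** beta` with `beta = 4 * exp(b)`, and the names `beta_kernel`, `x`, and `b`, are assumptions made for illustration and are not taken from this announcement; the paper itself is the authority on the actual formulation.

```python
import math


def beta_kernel(x: float, b: float) -> float:
    """Evaluate an illustrative Beta-style kernel (assumed form, not the paper's code).

    x: normalized squared distance from the kernel center, clamped to [0, 1].
    b: shape parameter; lower values flatten the kernel, higher values sharpen it.
    """
    x = min(max(x, 0.0), 1.0)      # bounded support: the kernel is zero at x = 1
    beta = 4.0 * math.exp(b)       # assumed mapping from b to a positive exponent
    return (1.0 - x) ** beta


if __name__ == "__main__":
    # Compare a flat, a neutral, and a peaked kernel at the same distance.
    for b in (-2.0, 0.0, 1.0):
        print(f"b={b:+.1f}  kernel(0.5)={beta_kernel(0.5, b):.4f}")
```

Under these assumptions, a single learnable `b` per primitive lets the same kernel behave like a flat disc for smooth surfaces or a sharp peak for fine detail, which is the kind of adaptive frequency control the paragraph above describes.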

By achieving high fidelity with greater computational efficiency, DBS offers clear advantages for large-scale, real-time visualization, an area of increasing importance for simulations and virtual environments.

About the Geospatial Research Lab

Led by Associate Director Dr. Andrew Feng, ICT’s Geospatial Research Lab specializes in high-resolution 3D terrain construction for next-generation simulations. The team combines commercial photogrammetric tools with its own research and development pipeline, using drone platforms and electro-optical sensors to capture and process terrain data.

Their work supports the U.S. Army’s Simulation and Training Technology Center (STTC) and plays an integral role in the Army’s One World Server (OWS) system. ICT’s algorithms process low-altitude UAV collections into simulation-ready terrain used for applications such as line-of-sight analysis, pathfinding, and concealment assessment.

Recent research includes neural 3D terrain reconstructions for large-scale environments and semantic segmentation using zero-shot AI models. Looking ahead, the team aims to refine photogrammetry processes and apply neural rendering to achieve photorealistic reconstructions of geospatial terrain.

Showcasing Innovation

The SIGGRAPH presentation highlights how rendering innovations like Deformable Beta Splatting can support the lab’s broader mission: producing accurate, efficient, and scalable visualizations for operationally relevant simulations.

//

![GeospatialTeamSiggraph2025_2 copy, L - R: Dylan Sun, Dr. Andrew Feng, Rong Liu](https://ict.usc.edu/wp-content/uploads/2025/08/GeospatialTeamSiggraph2025_2-copy.webp)