By Dr. Ning Wang, Director, Human-Centered AI Lab, USC ICT

Dr. Ning Wang is the Director of the Human-Centered AI Lab and Research Associate Professor, Department of Computer Science, USC Viterbi School of Engineering. Dr. Wang graduated from Hunan University with a B.S. in Computer Science and Technology, received her M.S. in Computer Science from the University of Southern California (USC), and was awarded her PhD on computational social intelligence in pedagogical agents from the USC Information Sciences Institute (ISI).

Artificial intelligence (AI) is profoundly reshaping the way we live, work, and learn. As AI becomes increasingly ubiquitous, I believe it is essential to prepare our current workforce and younger generations to critically and productively engage with this transformative technology that will change how we problem-solve and make decisions.

Through my work as the Director of the Human-Centered AI Lab at the Institute for Creative Technologies (ICT), and as a Research Associate Professor in the Department of Computer Science at the USC Viterbi School of Engineering, I have dedicated my career to advancing AI research and education for service members, as well as K-12 students.

The Human-Centered AI Lab: Bridging AI and Society

At the Human-Centered AI Lab, my team and I focus on fostering collaboration between humans and AI, exploring ethical and societal implications, advancing AI education, and promoting trust calibration in AI systems. As human-AI collaboration is the future of work, it’s crucial to design AI that understands us and how to work with us.

The best place to begin, in my view, is to look at how we successfully work with others and how AI can emulate us. Take education for example: how should we translate our own behaviors, particularly those from teachers and tutors, to virtual teachers and tutors? Our research particularly focuses on developing social intelligence for AI. For example, what tactics do expert human tutors use when they inevitably have to criticize a student and instruct them on what to do next, without negatively impacting the student’s motivation? What creates that sparkle when a charismatic teacher gives a lecture? What should an AI listener do to encourage a student struggling to explain a difficult concept? Our series of investigations highlighted how people, knowing they are interacting with an AI, a machine, inevitably behave as if they are interacting with another person, if the AI exhibits socially intelligent behavior.

There is intriguing research from my mentor and colleague, Jonathan Gratch, indicating that AI may occupy a sweet spot between human and machine that allows us to reap the benefits of both human-human interaction and human-machine interaction.

In addition to embodied AI, our lab is also part of the explainable AI movement, begun almost a decade ago, to investigate trust within human-AI teaming. Our mission is to unpack the AI “black box” and enable AI’s human partners in problem-solving to make informed decisions on when to adopt and when to override an AI’s decision. In collaboration with researchers at the Army Research Laboratory, we have explored human-AI teaming for reconnaissance, search-and-rescue, and cybersecurity.

Our research addresses the challenge of explaining algorithms that are interpretable but too complex for AI’s human partners to understand, with the goal of facilitating unbiased human decision making. Our research has uncovered just how much an AI that can explain and justify its decisions can influence its human teammates. Our objective, however, is not to foster blind trust in AI, but to develop a calibrated level of trust in AI. After all, AI will inevitably make mistakes.

Developing AI Literacy for Current and Future Workforces

Improving AI is only half the equation in human-AI interaction. Our understanding of AI’s inner workings can potentially influence our decisions to team up with AI. In 2018, my lab was part of a nationwide pioneering effort, initiated by the National Science Foundation, toward AI education outside higher education. This includes both K-12 education and an AI-ready workforce within the military.

For example, within the military, it is crucial to ensure that service members comprehend the fundamentals of how an AI-based system works—not just how to use it. This will be key to enabling the success of AI tools in tactical and non-tactical applications.

Some of our key projects highlight our commitment to innovation:

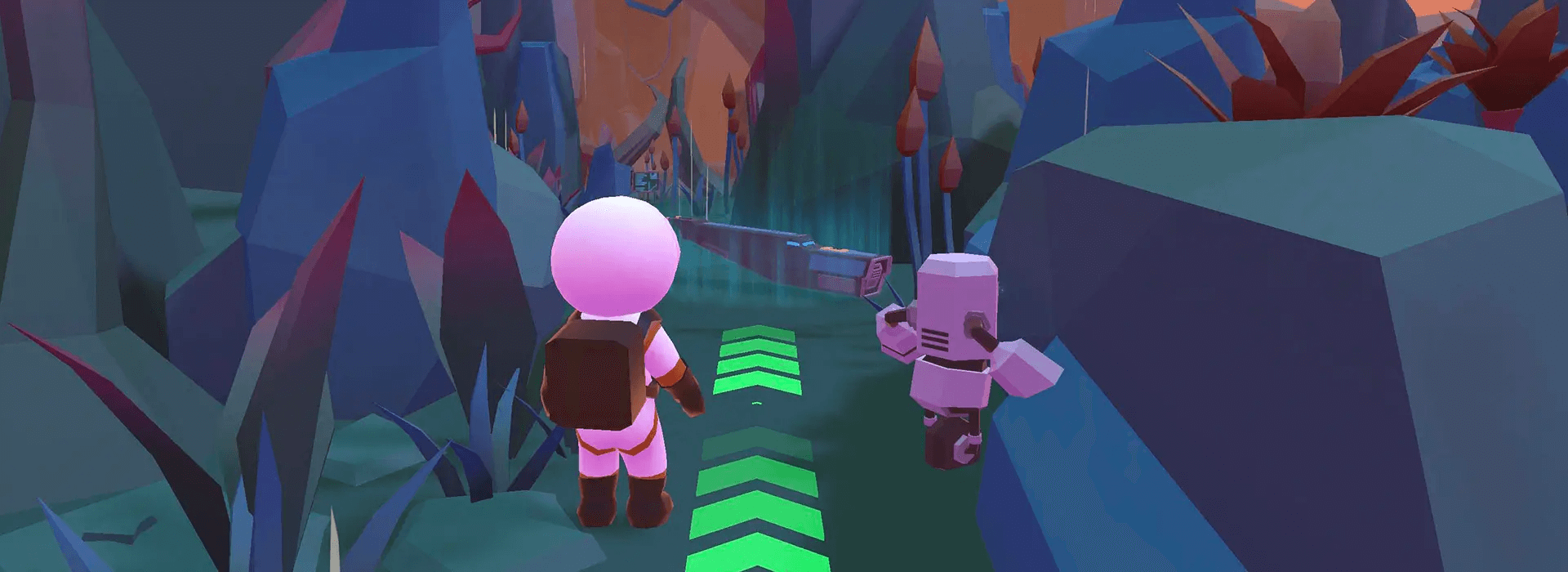

- Building Basic AI Competency for All: This initiative centers on trust in AI through education. A better understanding of the principles and concepts of AI and data science has the potential to improve the adoption of AI in both the military and civilian workforce, now and in the future. Through an immersive 3D role-playing game called Becoming Fei (formerly Age of AI), the project simulates abstract AI and data science concepts, making them concrete and accessible to novice learners. The project is supported by the Army Research Laboratory. The game is accessible through a web browser and will be made freely available to the public via the project’s website.

- AI Behind Virtual Humans: Supported by the National Science Foundation (NSF), this project develops an interactive exhibit to introduce state-of-the-art AI behind a virtual human to young children and their caregivers. The exhibit includes a suite of AI-driven activities that invites visitors to experience, learn, and reflect on the AI that’s all around us. Children, given their developing understanding of human cognition, often face challenges in conceptualizing machine and artificial intelligence. To address this challenge, we developed a strategy called metacognitive embodiment in the design of the exhibit activities. This approach encourages children to reflect on their own intelligent performance on tasks, then compare their mental models to AI systems designed to complete similar tasks. The exhibit is open to the public from November 2023 to May 2025 at the Lawrence Hall of Science.

- Problem-Solving with Probabilistic AI: In this NSF-funded project, we developed an educational game, The 7th Patient, to help high school students learn about everyday probability and its use in probabilistic AI, such as Bayesian networks. The game guides students through a series of scenario-based problem-solving tasks, from making medical diagnoses to diagnosing enemy weaknesses. Following a successful pilot with over 1,000 students, the game’s full release is planned for Q2 2025.

- Explainable AI for Education: My team and I created ARIN-561, a game designed to help high school students understand AI through engaging gameplay. By integrating gameplay data, surveys, and pre/post assessments, we have demonstrated how interactive experiences like ARIN-561 can effectively advance AI literacy. Supported by the National Science Foundation, the game has received multiple accolades, including a Student Choice Award at the I/ITSEC Serious Games Summit.

The Future of AI Learning Experiences

As the field continues to evolve, I believe that creating relatable AI learning experiences is a critical strategy for positioning future generations for success in an AI-driven world.

For decades, researchers have explored using AI to help students learn science, math, reading, and writing. Perhaps it’s now time for AI to help us learn about AI.

As AI is increasingly integrated into every aspect of our work, study, and life, AI learning is taking place outside the classroom, through just-in-time explanations, and through our own experience and experimentation with data fed to AI. What we learn about AI is less about the algorithms and parameters and more about how we accomplish tasks in work and life with AI: what it can and can’t do, and what it shouldn’t be used to do.

Through our innovative projects and groundbreaking research at the Human-Centered AI Lab, we are paving the way for a more informed AI future, equipping young minds and current workforces with the tools to navigate and shape the technologies of today and tomorrow.
