BYLINE: Dr. Andrew Feng, Associate Director, Geospatial Terrain Lab, ICT; Research Assistant Professor, Computer Science, Viterbi School of Engineering

Last month, Butian Xiong, and Mallika Gummuluri, two of my Research Assistants in the Geospatial Terrain Lab at ICT, presented their research at the GEOINT 2025 Symposium in St. Louis, Missouri.

Mallika Gummuluri has recently graduated with a Master’s in Spatial Data Science, from USC Spatial Sciences Institute, and her research focus is on utilizing deep learning for real-time object detection in high-altitude drone imagery.

Butian Xiong is working towards his Master’s in Computer Science at USC Viterbi School of Engineering.

Automated Ground Control Point Detection for Enhanced 3D Geospatial Modeling]

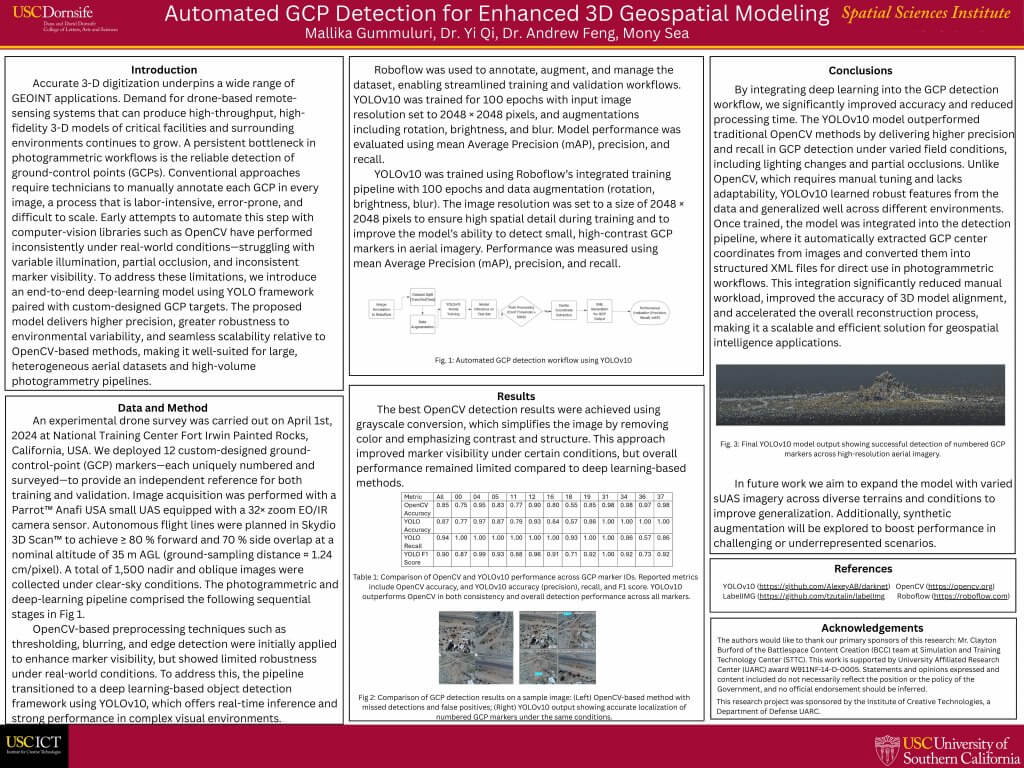

Mallika Gummuluri’s research poster “Automated Ground Control Point Detection for Enhanced 3D Geospatial Modeling” (Mallika Gummuluri, Dr. Yi Qi, Dr. Andrew Feng, Mony Sea), demonstrated that accurate 3D digitization is foundational for geospatial intelligence (GEOINT). Particularly in the context of drone-based remote sensing.

However, a major bottleneck in photogrammetric workflows is the detection of ground-control points (GCPs), which are essential for accurate georeferencing and model alignment. Traditional methods rely on manual annotation of GCPs in every image—a time-consuming and error-prone process that hampers scalability.

Early automation attempts using conventional computer vision tools like OpenCV struggled with issues such as inconsistent lighting, partial occlusion, and variable marker visibility in real-world environments.

To address these limitations, our research introduces a deep-learning-based solution using the YOLOv10 object detection framework paired with custom-designed GCP markers. The proposed approach aims to increase detection accuracy, robustness, and scalability across diverse aerial datasets and operational conditions.

An experimental drone survey was conducted on April 1, 2024, at the National Training Center in Fort Irwin, California. The team deployed 12 uniquely numbered and surveyed GCP markers and collected 1,500 high-resolution nadir and oblique images using a Parrot Anafi USA drone equipped with a 32× zoom EO/IR sensor. Flights were autonomously planned using Skydio 3D Scan™ software to maintain high image overlap, and data were captured under clear-sky conditions at approximately 1.24 cm/pixel ground resolution.

Initially, OpenCV techniques such as thresholding and edge detection were applied to the imagery. While grayscale conversion modestly improved detection under some conditions, the overall performance of these conventional methods remained inadequate.

The pipeline was then transitioned to a YOLOv10-based model, trained using Roboflow with extensive data augmentation (rotation, brightness, blur) to improve generalization. The model was trained over 100 epochs on high-resolution 2048 × 2048 pixel images, allowing it to detect small, high-contrast markers effectively. Evaluation metrics included mean Average Precision (mAP), precision, recall, and F1 score.

Results showed that YOLOv10 significantly outperformed OpenCV in both detection accuracy and consistency. It demonstrated high precision and recall across all 12 GCP markers, even in challenging visual environments. Once trained, the YOLOv10 model was integrated into an automated pipeline that extracted GCP coordinates and formatted them into XML files, ready for direct use in photogrammetric reconstruction software. This end-to-end integration reduced manual labor, improved 3D model accuracy, and accelerated overall processing time.

Mallika Gummuluri concluded that YOLOv10 provides a scalable, efficient, and robust solution for GCP detection in high-volume photogrammetry workflows. Looking ahead, they plan to enhance model generalization through expanded datasets from varied terrains and synthetic data augmentation.

This research originated within the Geospatial Terrain Lab at ICT and is supported by the U.S. Army’s Simulation and Training Technology Center. We believe it represents a significant advancement in the automation of geospatial workflows, with practical applications in defense, urban planning, and infrastructure monitoring.

Advancing Large-Scale Scene Reconstruction with TripleS-Gaussian

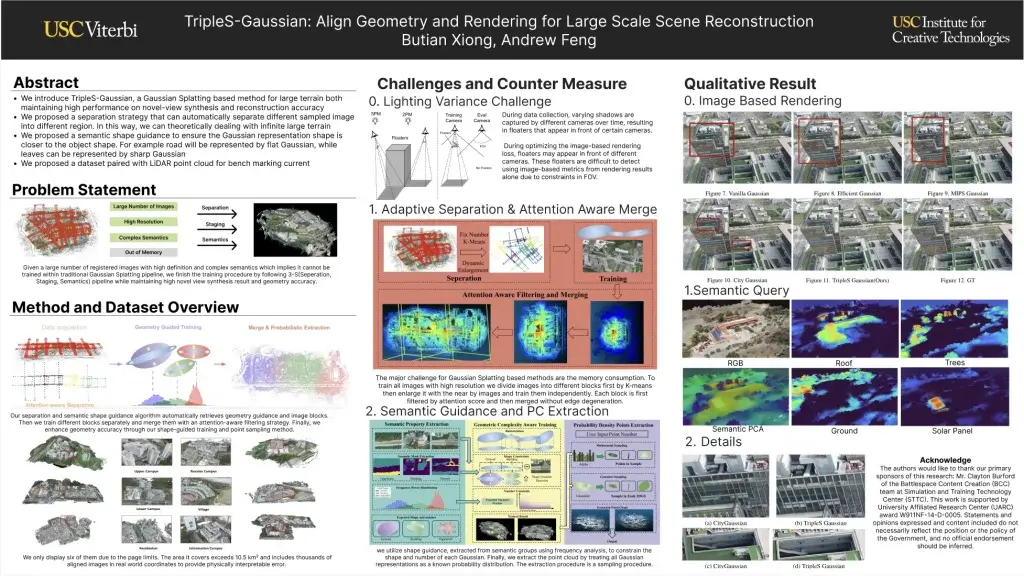

Butian Xiong showcased our recent work titled “TripleS-Gaussian: Align Geometry and Rendering for Large Scale Scene Reconstruction”—a project he co-authored with me as his advisor.

This project introduces a new method for reconstructing large outdoor environments in 3D using a technique called TripleS-Gaussian, which stands for Separation, Staging, and Semantics. In simple terms, we are addressing one of the biggest problems in this area: how to reconstruct very large scenes both accurately and efficiently using photographic images and LiDAR (laser-based depth sensing).

Existing methods in 3D reconstruction often struggle with either rendering quality (how realistic the images look from new viewpoints) or with geometric accuracy (how close the 3D model is to the real-world shape). Our goal was to improve both—without requiring high-end computing resources.

The Key Contributions of Our Work

There are four main innovations behind our approach as illustrated in the above mentioned poster:

- A Large-Scale Dataset: We built a dataset covering over 10.5 square kilometers, collected using drone imagery and dense LiDAR point clouds. This scale is significantly larger than what previous datasets offered. Importantly, our data are aligned using global coordinates (WGS84), allowing us to measure reconstruction errors in real-world units like meters.

- Separation Strategy: To deal with GPU memory limits, we introduced a smart partitioning method. Using an attention-aware clustering algorithm, we divide the full scene into manageable chunks that can be processed independently. This separation ensures smooth transitions between chunks and allows training to scale across large scenes without crashing the system.

- Semantic Shape Guidance: We used semantic information—like identifying roads, buildings, or trees in an image—to help shape the 3D representation. For example, a road is represented with a flat shape, while leaves or small structures might have sharper or more detailed representations. This step ensures that the geometry of our reconstruction aligns with what the object actually is.

- Staged Training: Instead of trying to optimize everything at once, we train in stages. First, we focus on rendering quality to get visually appealing outputs. Then, we refine the geometry using semantic constraints and remove irrelevant data points. This two-step approach helps us achieve strong results in both image-based and geometry-based evaluations.

Why This Matters

3D reconstruction is becoming essential in many fields—from urban planning and environmental monitoring to virtual reality and military simulations. At ICT, we’re particularly interested in developing scalable technologies for real-world applications. Whether it’s modeling a city after a natural disaster or simulating environments for training, accurate large-scale reconstructions are critical.

Previous methods like Structure from Motion (SfM) and Neural Radiance Fields (NeRF) have made significant progress. However, SfM struggles with noisy data in large areas, and NeRF requires dense camera coverage and expensive computation. More recent methods like 3D Gaussian Splatting (3DGS) offer faster training and rendering but often compromise on memory usage and geometric accuracy. TripleS-Gaussian helps bridge this gap by making it possible to train large models efficiently while maintaining high-quality geometry.

Reflections on GEOINT and What’s Next

Presenting this work at GEOINT was an inspiring experience for our research assistants, giving them not only practice in talking about their findings, but also providing an opportunity to speak with researchers and professionals from national labs, startups, and federal agencies. Many conference attendees, who stopped by our work, were interested in how our method could be adapted for use in emergency response, defense simulations, and infrastructure monitoring, and expressed appreciation for the semantic-guided approach, which could make reconstructions more interpretable and useful in decision-making contexts.

The feedback we received also highlighted areas for improvement. One of the biggest challenges remains the “floaters” in Gaussian Splatting—transparent or semi-transparent points that affect the final geometry. We plan to explore better filtering and mesh extraction techniques that go beyond point clouds. This could make the results more reliable in applications where geometry really matters, like robotics or autonomous navigation.

Moving forward, we are also working on improving the interpretability of these models. For example, if a reconstruction system knows that it’s looking at a tree, road, or building, it could provide more detailed, category-specific outputs. This would help bring together the worlds of computer vision, geospatial analysis, and human-centered simulation.

Final Thoughts

Attending GEOINT 2025 was more than just an opportunity for our two research assistants to present their findings—it was also a chance to connect with a vibrant and growing community of researchers and practitioners.

We look forward to continuing to support their academic work, as we move towards making large-scale scene reconstruction more scalable, semantically rich, and grounded in real-world data.

//