Background

AI will continue to profoundly impact society around the globe, and it is therefore vital to equip youth, our future workforce, with a fundamental knowledge of AI. Museums and other informal spaces offer open and interactive opportunities for the public to engage with and learn about AI. As AI is arguably the science of building intelligence that thinks and acts like humans, Virtual Humans provide an ideal vehicle to illustrate many fields of AI.

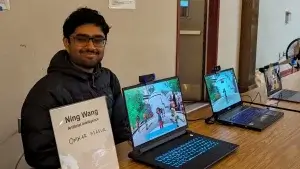

To this end, a multidisciplinary team of researchers, led by Dr. Ning Wang, Research Associate Professor of computer science, USC Viterbi, and Director of the Human-Centered AI Lab at ICT, together with the Lawrence Hall of Science and TERC, a non-profit providing innovative solutions for STEM education, developed a Virtual Human exhibit. Drawing on expertise in AI, learning design, and assessment from ICT, the exhibit provides a collaborative learning experience and engages visitors through structured conversations with a Virtual Human, while showcasing how AI drives the Virtual Human’s behavior behind the scenes.

The exhibition opened at the Lawrence Hall of Science, Berkeley, California in November 2023. It was scheduled to close in May 2024, but due to its overwhelming popularity, the exhibit will now remain open until spring 2026!

Objectives

The Virtual Human exhibit investigated three research questions:

- How can a museum experience engage visitors in collaborative learning about AI?

- How can complex AI concepts underlying the Virtual Human be communicated in a way that is understandable by the general public?

- How, and to what extent, does the Virtual Human exhibit increase knowledge and reduce misconceptions about AI?

To answer the questions above, the team combined evidence-based research in Computer Supported Collaborative Learning with existing conversational Virtual Human technology developed through decades of collaborative research in AI, including machine vision, natural language processing, automated reasoning, character animation, and machine learning.

Results

The current iteration of the installation includes a number of interaction stations, each with a digital activity and an accompanying unplugged activity that works together with its digital counterpart to facilitate the understanding of AI. The activities have now been piloted in a series of studies with thousands of visitors at the Lawrence Hall of Science museum and include:

- Mirror Mirror: A facial expression recognizer that identifies emotional states (happiness, anger, fear, sadness, disgust, and surprise) from vision input. The virtual human then guides the visitors in understanding how machine vision identifies faces, tracks regions of a face, and classifies expressions.

- Teach Me Silly: Visitors learn to classify their own facial expressions (silly, serious, etc.) and are taken through the stages of the machine learning process: introduction, data collection, model construction, model testing, and reflection.

- Comedy Corner: The Virtual Human tells a joke and uses multimodal behavior analysis (e.g., from vision and speech) to infer whether the visitors like the joke. A laughter detector displays the speech spectrogram when laughter is recognized in the speech signal, and a smile detector displays the image of a smile recognized in the vision input.

- Control My Face: Visitors have their faces superimposed on a video where a speaker (now with the visitor’s face) makes statements that the young visitors may not agree with, e.g., “I like broccoli.” or “I wish I could go to bed earlier.”

- Let’s Make A Plan: Visitors are guided to set goals (e.g., cleaning up the cafeteria) and design actions (e.g., picking up a soda can) for the virtual human. AI planning algorithms then string together a plan for the virtual human to achieve the goals with the designed actions.
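
To give a sense of how a planner in the spirit of Let’s Make A Plan can string designed actions into a plan, here is a minimal sketch in Python. It is an illustration only and not the exhibit's implementation: the source does not say which planning algorithm is used, so breadth-first forward search is an assumption, and the `Action` type, the `find_plan` function, and the cafeteria facts and action names are all hypothetical.

```python
from collections import deque
from typing import NamedTuple


class Action(NamedTuple):
    """A hypothetical visitor-designed action, described by the facts it needs and changes."""
    name: str
    preconditions: frozenset   # facts that must hold before the action
    add_effects: frozenset     # facts that become true afterwards
    delete_effects: frozenset  # facts that stop being true afterwards


def find_plan(initial, goal, actions):
    """Breadth-first forward search: returns a shortest list of action names, or None."""
    start = frozenset(initial)
    goal = frozenset(goal)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return plan
        for action in actions:
            if action.preconditions <= state:
                next_state = (state - action.delete_effects) | action.add_effects
                if next_state not in visited:
                    visited.add(next_state)
                    frontier.append((next_state, plan + [action.name]))
    return None  # no sequence of the given actions reaches the goal


# Hypothetical cafeteria clean-up actions (names and facts are made up for illustration).
ACTIONS = [
    Action("pick_up_soda_can",
           frozenset({"can_on_floor", "hands_free"}),
           frozenset({"holding_can"}),
           frozenset({"can_on_floor", "hands_free"})),
    Action("walk_to_recycling_bin",
           frozenset({"at_table"}),
           frozenset({"at_bin"}),
           frozenset({"at_table"})),
    Action("drop_can_in_bin",
           frozenset({"holding_can", "at_bin"}),
           frozenset({"can_recycled", "hands_free"}),
           frozenset({"holding_can"})),
]

plan = find_plan({"can_on_floor", "hands_free", "at_table"}, {"can_recycled"}, ACTIONS)
print(plan)
```

In this sketch the goal is a set of facts and each action lists what it requires and what it changes, so the search only has to try applicable actions until every goal fact holds. If the designed actions cannot reach the goal, `find_plan` returns `None`, which mirrors the idea that a plan depends on having designed the right actions.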

After the Virtual Humans exhibition was installed at the Lawrence Hall of Science, researchers continued to measure and draw conclusions from visitor interactions. This enabled researchers to produce theoretical and practical advances in helping the general public develop an understanding of AI capability and ethics, to advance knowledge of how young learners develop an understanding of AI, and to formulate design principles for creating collaborative learning experiences in informal settings.

Findings have been published at conferences such as Computers in Education, Interaction Design and Children, and AI in Education, and will continue to be shared with the community in Q4 2025.

Next Steps

Following the success of the exhibition, the team is planning to further disseminate the exhibit activities to other museums. Additionally, the team plans to expand the activities to support more in-depth learning in other informal learning spaces, such as after-school activities and summer camps.

This material is based upon work supported by the National Science Foundation under Grant No. 2116109. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.