Background

Bidirectional Reflectance Distribution Functions (BRDFs) are essential for realistic rendering in computer graphics, particularly in applications like battlefield simulation and training. By modeling how a surface reflects light, including diffuse, specular, and glossy reflection, BRDFs enhance immersion and realism. Beyond computer graphics, BRDF models play a crucial role in remote sensing, where they describe how surfaces reflect sunlight and other electromagnetic radiation, aiding object tracking and environmental analysis. In neural rendering, reducing latency (the elapsed time between simulation input and visual output) is critical to user experience. Faster rendering minimizes simulation sickness and makes remote rendering feasible, so BRDF optimization is an important factor in virtual simulation technologies.
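To make the diffuse and specular terms concrete, here is a minimal sketch of an analytic BRDF in Python. It uses the textbook modified Phong model (a Lambertian diffuse term plus a normalized specular lobe), not the capture or authoring method described on this page. The function name `phong_brdf` and the parameters `kd`, `ks`, and `shininess` are illustrative assumptions.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(w, n):
    """Mirror the unit direction w about the unit normal n."""
    d = dot(w, n)
    return tuple(2.0 * d * nc - wc for wc, nc in zip(w, n))


def phong_brdf(w_in, w_out, normal, kd=0.6, ks=0.3, shininess=50.0):
    """Evaluate a modified Phong BRDF (illustrative, hypothetical parameters).

    w_in and w_out point away from the surface, toward the light and the
    viewer. kd and ks are the diffuse and specular albedos; keeping
    kd + ks <= 1 keeps this model energy conserving.
    """
    w_in, w_out, normal = normalize(w_in), normalize(w_out), normalize(normal)
    # No reflection when either direction is below the surface.
    if dot(w_in, normal) <= 0.0 or dot(w_out, normal) <= 0.0:
        return 0.0
    # Diffuse term: constant in every outgoing direction.
    diffuse = kd / math.pi
    # Specular term: a lobe centered on the mirror direction of w_in.
    cos_alpha = max(0.0, dot(reflect(w_in, normal), w_out))
    specular = ks * (shininess + 2.0) / (2.0 * math.pi) * cos_alpha ** shininess
    return diffuse + specular


if __name__ == "__main__":
    n = (0.0, 0.0, 1.0)
    light = (0.0, 0.0, 1.0)
    print("mirror direction:", phong_brdf(light, (0.0, 0.0, 1.0), n))
    print("45 degrees off:  ", phong_brdf(light, (1.0, 0.0, 1.0), n))
```

Viewed along the mirror direction, the specular lobe dominates. At 45 degrees off that direction the value falls back to roughly the diffuse constant `kd / math.pi`, which is the qualitative difference between glossy and matte appearance.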

Objectives

Our goal in BRDF material editing research is to create a powerful yet accessible way to modify materials in PBR-based neural rendering. We’re developing a flexible and intuitive framework that lets users generate diverse and realistic reflectance maps, such as albedo, specular, and surface normal maps, for any object. By keeping the process efficient and cost-effective, we aim to make high-quality material editing more accessible to everyone in the field.

In material analysis, our goal is to develop an AI-based framework for estimating and analyzing the materials of input objects. This technology will enhance the accuracy of remote sensing, object detection, and tracking, providing more precise and reliable material insights.

Results

We’ve developed a novel algorithm that captures the BRDF of complex objects, enabling our Light Stage to support a wider range of material classes. Our BRDF authoring framework generates high-quality materials for any given object. Additionally, we built and processed a comprehensive material database containing approximately 200 objects across 12 distinct classes, improving the accuracy and diversity of material representation in rendering applications.

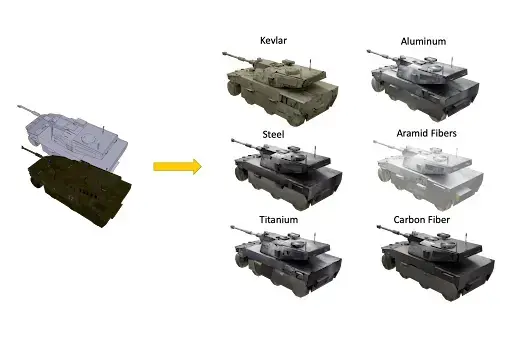

Image: Results of our material authoring tool. Our model accepts user text input to generate matching materials for 3D objects, enabling efficient camouflage switching during soldier training and camouflage design.

Beyond material generation, we have built a scalable, robust, and efficient architecture for object material analysis and editing in rendering applications. This technology enhances Synthetic Training Environments (STE) by balancing high-fidelity visuals with reduced computational and battery costs, supporting both real-time training (STE Live) and browser-based monitoring (STE TMT). The system’s automation minimizes reliance on skilled personnel, allowing military users to establish routines with minimal setup while safeguarding training information.

Designed for global portability, the system operates with simple inputs (text, a processing unit, and a deployment device such as a VR/AR headset, phone, or computer), making it adaptable to various onsite applications. This approach also paves the way for further research in visually based fields such as target identification and recognition, and for rapid visual and numerical feedback in camouflage design.

For more information, please visit https://vgl.ict.usc.edu/